LINSTOR User’s Guide

Please Read This First

This guide is intended to be used as a reference guide and handbook for LINSTOR®, a software-defined storage (SDS) solution.

| Throughout this guide, it is assumed that you are using the latest versions of LINSTOR and related tools. |

This guide is organized as follows:

-

An Introduction to LINSTOR is a foundational overview of LINSTOR and provides explanations for LINSTOR concepts and terms.

-

Basic Administrative Tasks and System Setup covers LINSTOR’s basic functionality and shows you how to perform common administrative tasks. You can also use this chapter as a step-by-step guide for deploying a basic LINSTOR setup.

-

Advanced LINSTOR Tasks shows a variety of advanced and important LINSTOR tasks and configurations, so that you can use LINSTOR in more complex ways.

-

Administering LINSTOR by GUI deals with the graphical client approach to managing LINSTOR clusters that is available to LINBIT® customers.

-

LINSTOR Integrations has chapters that deal with how to implement a LINSTOR based storage solution with various platforms and technologies, such as Kubernetes, Proxmox VE, OpenNebula, Docker, OpenStack, and others, by using the LINSTOR API.

Introduction to LINSTOR

1. An Introduction to LINSTOR

To help you use LINSTOR® effectively, this “Introduction to LINSTOR” chapter provides an overview of the software, explains how it works and deploys storage, and introduces important concepts and terms.

1.1. An Overview of LINSTOR

LINSTOR is an open source configuration management system, developed by LINBIT® for storage on Linux systems. It manages LVM logical volumes, ZFS ZVOLs, or both, on a cluster of nodes. It uses DRBD® for replication between different nodes and to provide block storage devices to users and applications. Some of its features include snapshots, encryption, and caching of HDD backed data in SSDs.

1.1.1. Where LINSTOR is Used

LINSTOR was originally developed to manage DRBD resources. While you can still use LINSTOR to make managing DRBD more convenient, LINSTOR has evolved and it is often integrated with software stacks higher up to provide persistent storage more easily and more flexibly than would otherwise be possible within those stacks.

LINSTOR can be used on its own or you can integrate it with other platforms, such as Kubernetes, OpenShift, OpenNebula, OpenStack, Proxmox VE, and others. LINSTOR runs on bare-metal on-premises hardware, or you can use it within virtual machines (VMs), containers, clouds, or hybrid environments.

1.1.2. LINSTOR Supported Storage and Related Technologies

LINSTOR can work with the following storage providers and related technologies:

-

LVM and LVM thin volumes

-

ZFS and ZFS thin volumes

-

File and FileThin (loop devices)

-

Diskless

-

Exos [DEPRECATED]

-

SPDK (remote)

-

Microsoft Windows Storage Spaces and thin Storage Spaces

-

EBS (target and initiator)

-

Device mapper cache (dm-cache) and writecache (dm-writecache)

-

bcache

-

LUKS

-

DRBD

By using LINSTOR, you can work with these technologies on their own or else in various meaningful combinations.

1.2. How LINSTOR Works

A working LINSTOR setup requires one active controller node that runs the LINSTOR controller software as a systemd service, linstor-controller.service. This is the LINSTOR control plane, where the LINSTOR controller node communicates with LINSTOR satellite nodes.

The setup also requires one or more satellite nodes that run the LINSTOR satellite software as a systemd service, linstor-satellite.service. The LINSTOR satellite service facilitates storage and related actions on the node, for example creating storage volumes to provide data storage to users and applications. However, satellite nodes do not have to provide physical storage to the cluster. For example, you can have diskless satellite nodes that participate in the LINSTOR cluster for DRBD quorum purposes.

| It is also possible for a node to run both the LINSTOR controller and satellite services and act in a Combined role. |

You can think of the storage technologies implemented on LINSTOR satellite nodes, for example, DRBD replication, as the data plane. With LINSTOR, the control and data planes are separate and can function independently. This means, for example, that you can update the LINSTOR controller node or the LINSTOR controller software while your LINSTOR satellite nodes continue to provide (and replicate if using DRBD) storage to users and applications without interruption.

For convenience, a LINSTOR setup is often called a LINSTOR cluster in this guide, even though a valid LINSTOR setup can exist as an integration within another platform, such as Kubernetes.

Users can interact with LINSTOR by using either a CLI-based client or a graphical user interface (GUI). Both of these interfaces make use of the LINSTOR REST API. LINSTOR can integrate with other platforms and applications by using plugins or drivers that also make use of this API.

Communication between the LINSTOR controller and the REST API happens via TCP/IP and can be secured by using SSL/TLS.
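Because the client and the GUI both go through the REST API, any HTTP tool can also query the controller directly. The following is a minimal sketch that assumes plain (non-TLS) HTTP on TCP port 3370, the port the client uses by default, and the `/v1/nodes` endpoint for listing nodes; the controller host name `alice` is hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: query the LINSTOR controller's REST API with curl.
# Assumes plain HTTP on TCP port 3370; adjust for an HTTPS setup.

# Print the URL of a /v1 REST endpoint on the given controller.
linstor_api_url() {
    local controller="$1" path="$2"
    printf 'http://%s:3370/v1/%s\n' "$controller" "$path"
}

# Print the raw JSON that the controller returns for GET /v1/nodes.
list_nodes_json() {
    curl --silent --show-error --fail "$(linstor_api_url "$1" nodes)"
}
```

Calling `list_nodes_json alice` prints the controller’s JSON description of the nodes, roughly the information that `linstor node list` presents as a table.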

The southbound drivers that LINSTOR uses to interface with physical storage are LVM, thinLVM and ZFS.

1.3. Installable Components

A LINSTOR setup has three installable components:

-

LINSTOR controller

-

LINSTOR satellite

-

LINSTOR user interfaces (LINSTOR client and LINBIT SDS GUI)

Each installable component is available either as source code that you can compile or as a prebuilt package that you can install and run.

1.3.1. LINSTOR Controller

The linstor-controller service relies on a database that holds all the configuration information for the whole cluster. A node or container running the LINSTOR controller software is responsible for resource placement, resource configuration, and orchestration of any operational processes that require a view of the whole cluster.

Multiple controllers can be used for LINSTOR, for example, when setting up a highly available LINSTOR cluster, but only one controller can be active.

As mentioned earlier, the LINSTOR controller operates on a separate plane from the data plane that it manages. You can stop the controller service, update or reboot the controller node, and still have access to your data hosted on the LINSTOR satellite nodes. However, while no controller is running, you cannot perform any LINSTOR status or management tasks on the satellite nodes, even though they continue to serve their data.

1.3.2. LINSTOR Satellite

The linstor-satellite service runs on each node where LINSTOR consumes local storage or provides storage to services. It is stateless and receives all the information it needs from the node or container running the LINSTOR controller service. The LINSTOR satellite service runs programs like lvcreate and drbdadm. It acts like an agent on a node or in a container that carries out instructions that it receives from the LINSTOR controller node or container.

1.3.3. LINSTOR User Interfaces

When you need to interface with LINSTOR, you can send instructions to the active LINSTOR controller by using one of its user interfaces (UIs): the LINSTOR client, or the LINBIT SDS GUI.

Both of these UIs rely on the LINSTOR REST API.

LINSTOR Client

The LINSTOR client, linstor, is a command line utility that you can use to issue commands to the active LINSTOR controller node. These commands can be action-oriented, such as commands that create or modify storage resources in your cluster, or they can be status commands to glean information about the current state of your LINSTOR cluster.

You can use the LINSTOR client either by entering linstor followed by valid commands and arguments, or in the client’s interactive mode, by entering linstor on its own.

You can find more information about using the LINSTOR client in the Using the LINSTOR Client section in this user’s guide.

# linstor
Use "help <command>" to get help for a specific command.

Available commands:
- advise (adv)
- backup (b)
- controller (c)
- drbd-proxy (proxy)
- encryption (e)
- error-reports (err)
- exos
- file (f)
- help
- interactive
- key-value-store (kv)
- list-commands (commands, list)
- node (n)
- node-connection (nc)
- physical-storage (ps)
- remote
- resource (r)
- resource-connection (rc)
- resource-definition (rd)
- resource-group (rg)
- schedule (sched)
- snapshot (s)
- sos-report (sos)
- space-reporting (spr)
- storage-pool (sp)
- volume (v)
- volume-definition (vd)
- volume-group (vg)
LINSTOR ==>

LINBIT SDS Graphical User Interface

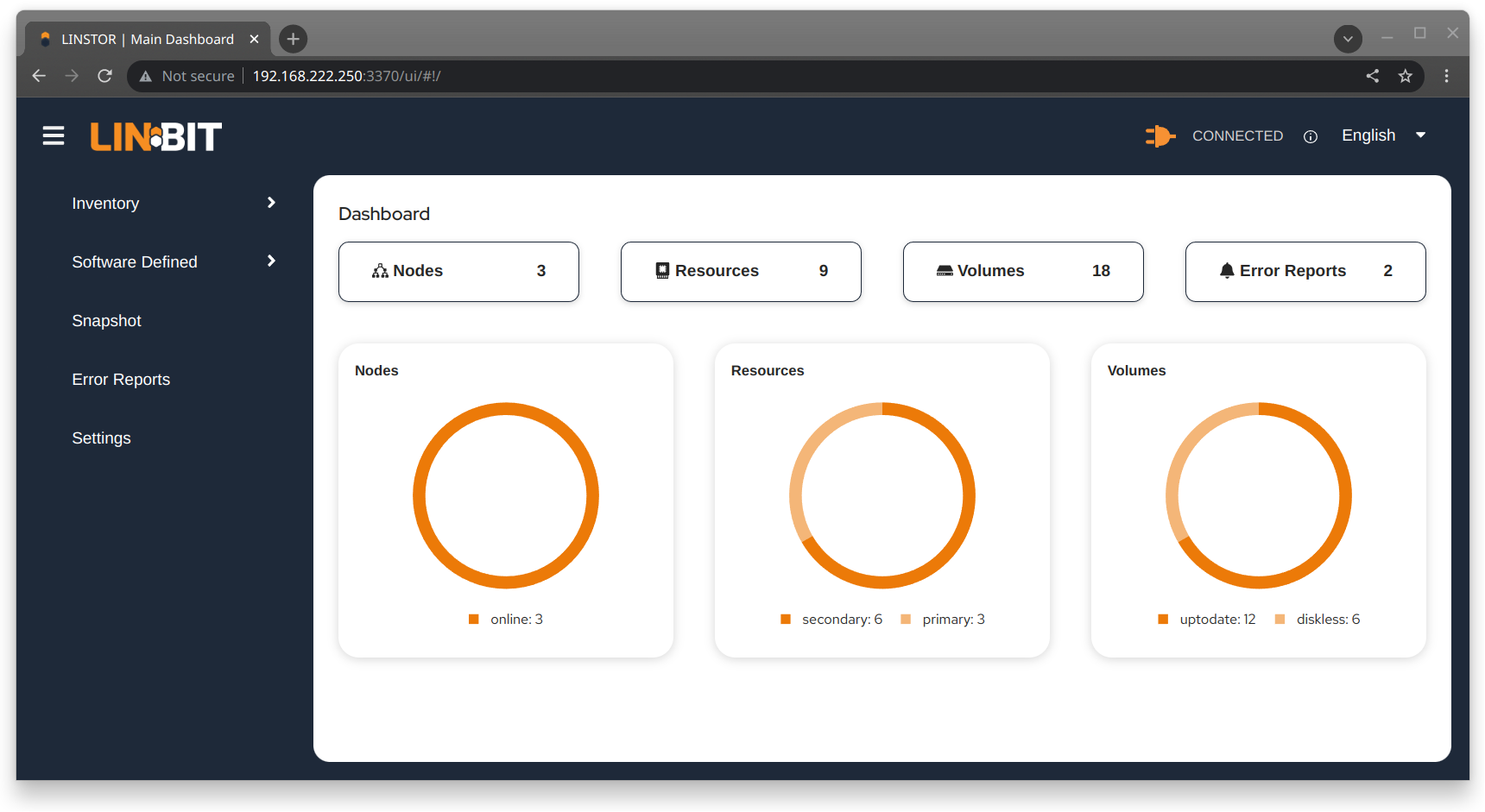

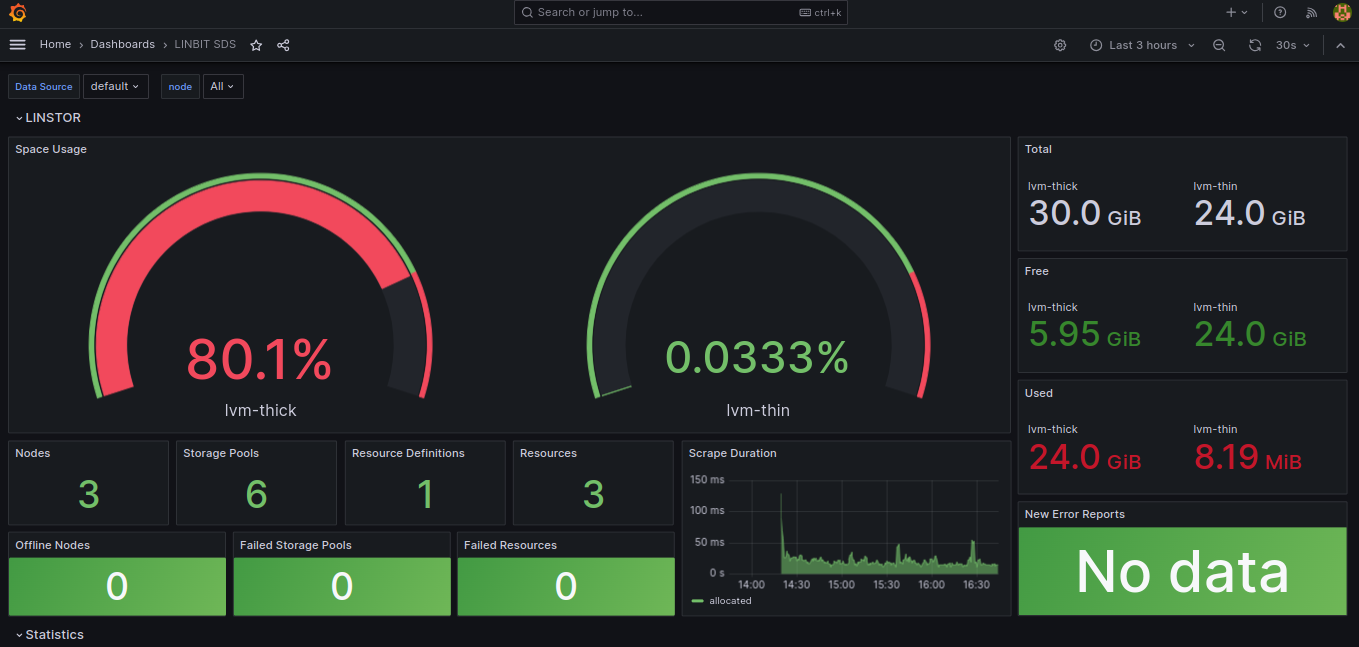

The LINBIT SDS graphical user interface (GUI) is a web-based GUI that you can use to work with LINSTOR. It can be a convenient way to navigate and get overview information about a LINSTOR cluster, or add, modify, or delete LINSTOR objects within a cluster. For example, you can add nodes, add or delete resources, or do other tasks.

You can find more information about using the GUI in the LINBIT SDS GUI chapter in this user’s guide.

1.4. Internal Components

The internal components of LINSTOR are abstractions of the software code that are used to describe how LINSTOR works and how you use it. Examples of internal components would be LINSTOR objects, such as resources or storage pools. Although these are abstractions, you will interact with them in a very real way as you use either the LINSTOR client or GUI to deploy and manage storage.

Along the way, this section also introduces and explains core concepts and terms that you will need to familiarize yourself with to understand how LINSTOR works and how to use it.

1.4.1. LINSTOR Objects

LINSTOR takes an object-oriented approach to software-defined storage (SDS). LINSTOR objects are the end result that LINSTOR presents to the user or application to consume or build upon.

The most commonly used LINSTOR objects are explained below and a full list of objects follows.

Resource

A resource is the LINSTOR object that represents consumable storage that is presented to applications and end users. If LINSTOR is a factory, then a resource is the finished product that it produces. Often, a resource is a DRBD replicated block device but it does not have to be. For example, a resource could be diskless to satisfy DRBD quorum requirements, or it could be an NVMe-oF or EBS initiator.

A resource has the following attributes:

-

The name of the node that the resource exists on

-

The resource definition that the resource belongs to

-

Configuration properties of the resource

Volume

A volume is the closest LINSTOR internal component to physical storage and is a subset of a resource. A resource can have multiple volumes. For example, you might want to have a database stored on slower storage than its transaction log in a MySQL cluster. To accomplish this by using LINSTOR, you could have one volume for the faster transaction log storage media and another for the slower database storage media, and have both under a single “MySQL” resource. By keeping multiple volumes under a single resource you are essentially creating a consistency group.
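As a sketch of the MySQL example, the commands below add two volume definitions to one resource definition. The resource name `mysql` and both sizes are hypothetical, the resource definition is assumed to exist already, and placing each volume on a different (faster or slower) storage pool is not shown. LINSTOR is expected to number the volumes 0 and 1 in creation order. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Add two volumes to an existing resource definition named "mysql".
# Volume numbers are expected to follow creation order (0, then 1).
add_mysql_volumes() {
    run linstor volume-definition create mysql 10G || return   # volume 0: transaction log
    run linstor volume-definition create mysql 100G            # volume 1: database files
}
```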

An attribute that you specify for a volume takes precedence over the same attribute if it is also specified “higher up” in the LINSTOR object hierarchy. This is because, again, a volume is the closest internal LINSTOR object to physical storage.

Node

A node is a server or container that participates in a LINSTOR cluster. The node object has the following attributes:

-

Name

-

IP address

-

TCP port

-

Node type (controller, satellite, combined, auxiliary)

-

Communication type (plain or SSL/TLS)

-

Network interface type

-

Network interface name

Storage Pool

A storage pool identifies storage that is assignable to other LINSTOR objects, such as LINSTOR resources, resource definitions, or resource groups, and can be consumed by LINSTOR volumes.

A storage pool defines:

-

The storage back-end driver to use for the storage pool on the cluster node, for example, LVM, thin-provisioned LVM, ZFS, and others

-

The node that the storage pool exists on

-

The storage pool name

-

Configuration properties of the storage pool

-

Additional parameters to pass to the storage pool’s back-end driver (LVM, ZFS, and others)
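For example, the following sketch registers a thin-provisioned LVM storage pool on one node. It assumes that the node already exists in the cluster and that an LVM volume group with a thin pool logical volume exists on it; the names `bravo`, `pool_ssd`, `vg_ssd`, and `thinpool` are hypothetical. Setting `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Register an existing LVM thin pool as a LINSTOR storage pool on one node.
# Arguments: node name, LINSTOR storage pool name, volume group, thin pool LV.
create_lvmthin_pool() {
    local node="$1" pool="$2" vg="$3" thin="$4"
    run linstor storage-pool create lvmthin "$node" "$pool" "$vg/$thin"
}
```

After `create_lvmthin_pool bravo pool_ssd vg_ssd thinpool`, `linstor storage-pool list` should show the new pool.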

A List of LINSTOR Objects

LINSTOR has the following core objects:

- EbsRemote
- ExternalFile
- KeyValueStore
- LinstorRemote
- NetInterface
- Node
- NodeConnection
- ResourceConnection
- ResourceDefinition
- ResourceGroup
- S3Remote
- Schedule
- Snapshot
- SnapshotDefinition
- SnapshotVolumeDefinition
- StorPool
- StorPoolDefinition
- Volume
- VolumeConnection
- VolumeDefinition
- VolumeGroup

1.4.2. Definition and Group Objects

While definitions and groups are also LINSTOR objects, they are a special kind. Definition and group objects can be thought of as profiles or templates. These template objects are used to create child objects that will inherit their parent object’s attributes. They might also have attributes that can affect child objects but are not attributes of the child objects themselves. These attributes could be things such as the TCP port to use for DRBD replication or the volume number that a DRBD resource should use.

Definitions

Definitions define the attributes of an object. Objects created from a definition will inherit the configuration attributes defined in the definition. A definition must be created before you can create an associated child object. For example, you must create a resource definition prior to creating the corresponding resource.
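The required order can be seen in a minimal sequence of client commands: first the resource definition, then a volume definition, and only then the resource. The sketch below lets LINSTOR choose the nodes by using `--auto-place`; the resource name, size, and replica count are hypothetical, and a suitable storage pool is assumed to exist. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Create definitions first, then the resource itself; stop at the first error.
# Arguments: resource name, volume size, number of replicas to auto-place.
create_resource() {
    local rsc="$1" size="$2" replicas="$3"
    run linstor resource-definition create "$rsc" || return
    run linstor volume-definition create "$rsc" "$size" || return
    run linstor resource create "$rsc" --auto-place "$replicas"
}
```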

There are two LINSTOR definition objects that you can create directly, by using the LINSTOR client: resource definitions and volume definitions.

- Resource definition

Resource definitions can define the following attributes of a resource:

-

The resource group that the resource definition belongs to

-

The name of a resource (implicitly, by virtue of the resource definition’s name)

-

The TCP port to use for the resource’s connection, for example, when DRBD is replicating data

-

Other attributes such as a resource’s storage layers, peer slots, and external name

- Volume definition

Volume definitions can define the following:

-

The size of the storage volume

-

The volume number of the storage volume (because a resource can have multiple volumes)

-

Metadata properties of the volume

-

The minor number to use for the DRBD device, if the volume is associated with DRBD replicated storage

In addition to these definitions, LINSTOR has some indirect definitions: the storage pool definition, the snapshot definition, and the snapshot volume definition. LINSTOR creates these automatically when you create the respective object.

Groups

Groups are similar to definitions in that they are like profiles or templates. Where definitions apply to LINSTOR object instances, groups apply to object definitions. As the name implies, a group can apply to multiple object definitions, just as a definition can apply to multiple object instances. For example, you can have a resource group that defines resource attributes for a frequently needed storage use case. You can then use the resource group to easily spawn (create) multiple resources that need to have those attributes, without having to specify the attributes every time you create a resource.

- Resource group

A resource group is a parent object of a resource definition where all property changes made on a resource group will be inherited by its resource definition children[1]. The resource group also stores settings for automatic placement rules and can spawn a resource definition depending on the stored rules.

A resource group defines characteristics of its resource definition child objects. A resource spawned from the resource group, or created from a resource definition that belongs to the resource group, will be a “grandchild” object of a resource group and the “child” of a resource definition. Every resource definition that you create will be a member of the default LINSTOR resource group, DfltRscGrp, unless you specify another resource group when creating the resource definition.

Changes to a resource group are applied retroactively to all resources or resource definitions that were created from the resource group, unless the same characteristic has been set on a child object, for example, a resource definition or resource that was created from the resource group.

All of this makes using resource groups a powerful tool to efficiently manage a large number of storage resources. Rather than creating or modifying individual resources, you can simply configure a resource group parent, and all the child resource objects will receive the configuration.

- Volume group

Similarly, volume groups are like profiles or templates for volume definitions. A volume group must always reference a specific resource group. In addition, a volume group can define a volume number, and a “gross” volume size.
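A resource group and its volume group can be sketched with the following client commands, after which new resources are spawned from the group instead of being configured one by one. The names `rg_ssd`, `pool_ssd`, and `res1`, the replica count, and the size are hypothetical, and the storage pool must already exist. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Create a resource group (the "profile") and the volume group it needs.
# Arguments: resource group name, storage pool name, replica count.
create_resource_group() {
    local rg="$1" pool="$2" replicas="$3"
    run linstor resource-group create "$rg" --storage-pool "$pool" --place-count "$replicas" || return
    # A volume group always references an existing resource group.
    run linstor volume-group create "$rg"
}

# Spawn a new resource definition and its resources from the group.
# Arguments: resource group name, new resource name, volume size.
spawn_from_group() {
    run linstor resource-group spawn-resources "$1" "$2" "$3"
}
```

For example, `create_resource_group rg_ssd pool_ssd 2` followed by `spawn_from_group rg_ssd res1 20G` would request a resource of size 20G with two replicas in `pool_ssd`.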

1.5. LINSTOR Object Hierarchy

As alluded to in previous subsections of this chapter, there is a concept of hierarchy among LINSTOR objects. Depending on the context, this can be described either as a parent-child relationship, or else as a higher-lower relationship where lower means closer to the physical storage layer[2].

A child object will inherit attributes that are defined on its parent objects, if the same attributes are not already defined on the child object. Similarly, a lower object will receive attributes that are set on higher objects, if the same attributes are not already defined on the lower object.

1.5.1. General Rules for Object Hierarchy in LINSTOR

The following are some general rules for object hierarchy in LINSTOR:

-

A LINSTOR object can only receive or inherit attributes that can be set on that object.

-

Lower objects receive attributes set on higher objects.

-

An attribute set on a lower object takes precedence over the same attribute set on higher objects.

-

Child objects inherit attributes set on parent objects.

-

An attribute set on a child object takes precedence over the same attribute set on parent objects.
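The precedence rules above amount to “start at the lowest object and take the first value you find”. The toy model below is not LINSTOR code: it simplifies the hierarchy to a single chain (volume, resource, resource definition, resource group) and represents each level’s setting as a positional argument, with an empty string meaning “not set”.

```shell
#!/usr/bin/env bash
# Toy model of LINSTOR attribute precedence: the lowest level that sets a
# value wins; unset (empty) levels fall through to the next higher level.
# Arguments, lowest first: volume, resource, resource definition, resource group.
resolve_property() {
    local value
    for value in "$@"; do
        if [ -n "$value" ]; then
            printf '%s\n' "$value"
            return 0
        fi
    done
    return 1   # not set anywhere in the chain
}
```

For example, `resolve_property "" "" "from-rd" "from-rg"` prints `from-rd`, because the resource definition is lower than the resource group; a value set on the volume as well would override both.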

1.5.2. Using Diagrams to Show Relationships Between LINSTOR Objects

This section uses diagrams to represent the hierarchical relationships between some of the most frequently used LINSTOR objects. Because of the number of LINSTOR objects and their interconnectedness, multiple diagrams are shown first rather than a single diagram, to simplify the conceptualization.

The next diagram shows the relationships between LINSTOR group objects on a single satellite node.

While the two preceding diagrams show higher-lower relationships between common LINSTOR objects, you can also think of some LINSTOR objects as having parent-child relationships. The next diagram introduces this kind of relationship between LINSTOR objects by using a storage pool definition (parent object) and a storage pool (child object) as an example. A parent object can have multiple child objects, as shown in the following diagram.

Having introduced the concept of parent-child relationships in a conceptual diagram, the next diagram is a modified version of the second diagram with some of those relationships added for groups and definitions. This modified diagram also incorporates some of the higher-lower relationships that were shown in the first diagram.

The next diagram synthesizes the relationship concepts of the preceding diagrams while also introducing new LINSTOR objects related to snapshots and connections. With the many objects and criss-crossing lines, the reason for building up to this diagram should be apparent.

Even with its seeming complexity, the preceding diagram is still a simplification and not an all-encompassing representation of the possible relationships between LINSTOR objects. As listed earlier, there are more LINSTOR objects than are shown in the diagram[3].

The good news is that you do not need to memorize the preceding diagram to work with LINSTOR. It can be useful to refer to, though, if you are troubleshooting attributes that you have set on LINSTOR objects, and their inheritance and effects on other LINSTOR objects in your cluster.

Administering LINSTOR

2. Basic Administrative Tasks and System Setup

This is a how-to style chapter that covers basic LINSTOR® administrative tasks, including installing LINSTOR and how to get started using LINSTOR.

2.1. Before Installing LINSTOR

Before you install LINSTOR, there are a few things that you should be aware of that might affect how you install LINSTOR.

2.1.1. Packages

LINSTOR is packaged in both the RPM and the DEB variants:

-

linstor-client contains the command line client program. It depends on Python, which is usually already installed. On RHEL 8 systems you will need to symlink python.

-

linstor-controller and linstor-satellite both contain systemd unit files for the services. They depend on Java runtime environment (JRE) version 1.8 (headless) or higher.

For further details about these packages see the Installable Components section above.

| If you have a LINBIT® support subscription, you will have access to certified binaries through LINBIT customer-only repositories. |

2.1.2. FIPS Compliance

This standard shall be used in designing and implementing cryptographic modules…

You can configure LINSTOR to encrypt storage volumes, by using LUKS (dm-crypt), as detailed in the Encrypted Volumes section of this user’s guide. Refer to the LUKS and dm-crypt projects for FIPS compliance status.

You can also configure LINSTOR to encrypt communication traffic between a LINSTOR satellite and a LINSTOR controller, by using SSL/TLS, as detailed in the Secure Satellite Connections section of this user’s guide.

LINSTOR can also interface with Self-Encrypting Drives (SEDs) and you can use LINSTOR to initialize an SED drive. LINSTOR stores the drive’s password as a property that applies to the storage pool associated with the drive. LINSTOR encrypts the SED drive password by using the LINSTOR master passphrase that you must create first.

By default, LINSTOR uses the following cryptographic algorithms:

-

HMAC-SHA2-512

-

PBKDF2

-

AES-128

A FIPS compliant version of LINSTOR is available for the use cases mentioned in this section. If you or your organization requires FIPS compliance at this level, contact LINBIT for details.

2.2. Installing LINSTOR

| If you want to use LINSTOR in containers, skip this section and use the Containers section below for the installation. |

2.2.1. Installing a Volume Manager

To use LINSTOR to create storage volumes, you need to install a volume manager, either LVM or ZFS, on your system.

2.2.2. Using a Script to Manage LINBIT Cluster Nodes

If you are a LINBIT® customer, you can download a LINBIT created helper script and run it on your nodes to:

-

Register a cluster node with LINBIT.

-

Join a node to an existing LINBIT cluster.

-

Enable LINBIT package repositories on a node.

Enabling LINBIT package repositories will give you access to LINBIT software packages, DRBD® kernel modules, and other related software such as cluster managers and OCF scripts. You can then use a package manager to fetch, install, and manage updating installed packages.

Downloading the LINBIT Manage Node Script

To register your cluster nodes with LINBIT, and configure LINBIT’s repositories, first download and then run the manage node helper script by entering the following commands on all cluster nodes:

# curl -O https://my.linbit.com/linbit-manage-node.py
# chmod +x ./linbit-manage-node.py
# ./linbit-manage-node.py

| You must run the script as the root user. |

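The script will prompt you for your LINBIT Customer Portal (https://my.linbit.com/) username and password. After you enter your credentials, the script lists the cluster nodes associated with your account (none at first).

Enabling LINBIT Package Repositories

After you specify which cluster to register the node with, have the script write the registration data to a JSON file when prompted. Next, the script shows you a list of LINBIT repositories that you can enable or disable. You can find LINSTOR and other related packages in the drbd-9 repository. Unless you need to use a different DRBD version branch, you should enable at least this repository.

Final Tasks Within the Manage Node Script

After you have finished selecting repositories, you can write the configuration to a file by following the script’s prompts. Next, be sure to answer yes to the question about installing LINBIT’s public signing key to your node’s keyring.

Before it exits, the script shows a message that suggests packages that you can install for different use cases.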

| On DEB-based systems you can install either a precompiled DRBD kernel module package, drbd-module-$(uname -r), or a source version of the kernel module, drbd-dkms. Install one or the other package, but not both. |
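The “one or the other” rule can be captured in a small helper that installs exactly one flavor of the module. This is a sketch for DEB-based systems only; the function name is hypothetical, and setting `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Install exactly one flavor of the DRBD kernel module:
#   prebuilt: module package built for the running kernel
#   dkms:     module built locally from source by DKMS
install_drbd_module() {
    case "$1" in
        prebuilt) run apt-get install -y "drbd-module-$(uname -r)" ;;
        dkms)     run apt-get install -y drbd-dkms ;;
        *)        echo "usage: install_drbd_module prebuilt|dkms" >&2; return 2 ;;
    esac
}
```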

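2.2.3. Installing LINSTOR by Using a Package Manager

After you register your node and enable the drbd-9 LINBIT package repository, you can use a DEB, RPM, or YaST2 based package manager to install LINSTOR and related components.

| If you are using a DEB-based package manager, refresh your package repositories list by entering apt update before proceeding. |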

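Installing DRBD Packages for LINSTOR Storage

| If you will be using LINSTOR without DRBD, you can skip installing these packages. |

If you want to be able to use LINSTOR to create DRBD replicated storage, you will need to install the required DRBD packages. Depending on the Linux distribution that you are running on your node, install the DRBD-related packages that the helper script suggested. If you need to review the script’s suggested packages and installation commands, you can enter: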

# ./linbit-manage-node.py --hints

2.2.4. Installing LINSTOR from Source Code

The LINSTOR project’s GitHub page is https://github.com/LINBIT/linstor-server

LINBIT also provides downloadable archive files of the source code for LINSTOR, DRBD, and more, available from the following page: https://linbit.com/linbit-software-download-page-for-linstor-and-drbd-linux-driver/

2.3. Upgrading LINSTOR

LINSTOR does not support rolling upgrades. The controller and satellites must run the same version; otherwise, the controller will discard the satellite with a VERSION_MISMATCH. This is not a problem, however, because a satellite does not perform any actions as long as it is not connected to a controller, and DRBD is not disrupted in any way.

If you are using the embedded default H2 database and you upgrade the linstor-controller package, an automatic backup file of the database is created in the default /var/lib/linstor directory. This file is a good restore point if, for any reason, a linstor-controller database migration fails. In that case, it is recommended that you report the error to LINBIT, restore the old database file, and roll back to your previous controller version.

If you use an external database or etcd, it is recommended that you back up your current database manually to create a restore point.

First, upgrade the linstor-controller and linstor-client packages on your controller host and then restart the linstor-controller service. The controller should start, and all of its satellite nodes should show OFFLINE(VERSION_MISMATCH). After that, upgrade the linstor-satellite package on all satellite nodes and restart the satellite service on each. After a short reconnection time, all nodes should show ONLINE again, and your upgrade is finished.
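The upgrade order can be scripted roughly as follows. This sketch assumes DEB-based nodes, root SSH access from the controller host to each satellite, and hypothetical node names; back up the database first as described above. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Step 1: on the controller host, upgrade controller and client, then restart.
upgrade_controller() {
    run apt-get update || return
    run apt-get install -y --only-upgrade linstor-controller linstor-client || return
    run systemctl restart linstor-controller
}

# Step 2: on each satellite (reached here over SSH as root), upgrade the
# satellite package and restart its service.
upgrade_satellite() {
    local node="$1"
    run ssh "root@$node" \
        'apt-get update && apt-get install -y --only-upgrade linstor-satellite && systemctl restart linstor-satellite'
}
```

Run `upgrade_controller` first, then `for node in bravo charlie; do upgrade_satellite "$node"; done`; afterwards, `linstor node list` should show every node ONLINE again.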

2.4. Containers

LINSTOR is also available as containers. The base images are available in LINBIT’s container registry, drbd.io.

| LINBIT’s container image repository (http://drbd.io) is only available to LINBIT customers or through LINBIT customer trial accounts. Contact LINBIT for information on pricing or to begin a trial. Alternatively, you can use LINSTOR SDS’ upstream project named Piraeus, without being a LINBIT customer. |

To access the images, you first have to log in to the registry by using your LINBIT Customer Portal credentials.

# docker login drbd.io

The following containers are available in this repository:

-

drbd.io/drbd9-rhel8

-

drbd.io/drbd9-rhel7

-

drbd.io/drbd9-sles15sp1

-

drbd.io/drbd9-bionic

-

drbd.io/drbd9-focal

-

drbd.io/linstor-csi

-

drbd.io/linstor-controller

-

drbd.io/linstor-satellite

-

drbd.io/linstor-client

An up-to-date list of available images with versions can be retrieved by opening http://drbd.io in your browser. Be sure to browse the image repository through HTTP, although the registry’s images themselves are pulled through HTTPS, using the associated docker pull command.

To load the kernel module, needed only for LINSTOR satellites, you’ll need to run a drbd9-$dist container in privileged mode. The kernel module containers either retrieve an official LINBIT package from a customer repository, use shipped packages, or they try to build the kernel modules from source. If you intend to build from source, you need to have the corresponding kernel headers (for example, kernel-devel) installed on the host. There are four ways to execute such a module load container:

-

Building from shipped source

-

Using shipped or prebuilt kernel modules

-

Specifying a LINBIT node hash and a distribution

-

Bind-mounting an existing repository configuration

Example building from shipped source (RHEL based):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \
  -v /usr/src:/usr/src:ro \
  drbd.io/drbd9-rhel7

Example using a module shipped with the container (do not bind-mount /usr/src):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \
  drbd.io/drbd9-rhel8

Example specifying a hash and a distribution (rarely used):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \
  -e LB_DIST=rhel7.7 -e LB_HASH=ThisIsMyNodeHash \
  drbd.io/drbd9-rhel7

Example using an existing repository configuration (rarely used):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \
  -v /etc/yum.repos.d/linbit.repo:/etc/yum.repos.d/linbit.repo:ro \
  drbd.io/drbd9-rhel7

| In both cases (hash + distribution, and bind-mounting a repository) the hash or repository configuration has to be from a node that has a special property set. Contact LINBIT customer support for help setting this property. |

| For now (that is, for DRBD versions earlier than 9.0.17), you must use the containerized DRBD kernel module, as opposed to loading a kernel module onto the host system. If you intend to use the containers, you should not install the DRBD kernel module on your host systems. For DRBD version 9.0.17 or greater, you can install the kernel module as usual on the host system, but you need to load the module with the usermode_helper=disabled parameter (for example, modprobe drbd usermode_helper=disabled). |
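If you load DRBD 9.0.17 or later on the host, you can make the parameter persistent with a modprobe.d options file, so that later loads of the module pick it up. In the sketch below, the file name `drbd.conf` is an arbitrary choice and `MODPROBE_DIR` exists only so that the function can be tried in a scratch directory; `load_drbd` must run as root.

```shell
#!/usr/bin/env bash
# Persist the DRBD module option in a modprobe.d file so that any later
# "modprobe drbd" uses it. MODPROBE_DIR defaults to /etc/modprobe.d.
persist_drbd_options() {
    local dir="${MODPROBE_DIR:-/etc/modprobe.d}"
    mkdir -p "$dir" || return
    printf 'options drbd usermode_helper=disabled\n' > "$dir/drbd.conf"
}

# Write the option file, then load the module now (requires root).
load_drbd() {
    persist_drbd_options || return
    modprobe drbd usermode_helper=disabled
}
```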

Next, run the LINSTOR satellite container in privileged mode as a daemon:

# docker run -d --name=linstor-satellite --net=host -v /dev:/dev \
  --privileged drbd.io/linstor-satellite

| --net=host is required for the containerized drbd-utils to be able to communicate with the host kernel by using Netlink. |

To run the LINSTOR controller container as a daemon, mapping ports 3370, 3376, and 3377 on the host to the container:

# docker run -d --name=linstor-controller -p 3370:3370 drbd.io/linstor-controller

To interact with the containerized LINSTOR cluster, you can either use a LINSTOR client installed on a system by using packages, or use the containerized LINSTOR client. To use the LINSTOR client container:

# docker run -it --rm -e LS_CONTROLLERS=<controller-host-IP-address> \
  drbd.io/linstor-client node list

From this point, you would use the LINSTOR client to initialize your cluster and begin creating resources by using the typical LINSTOR patterns.

To stop and remove a daemonized container and image:

# docker stop linstor-controller
# docker rm linstor-controller

2.5. Initializing Your Cluster

Before initializing your LINSTOR cluster, you must meet the following prerequisites on all cluster nodes:

-

The DRBD9 kernel module is installed and loaded.

-

The drbd-utils package is installed.

-

LVM tools are installed.

-

The linstor-controller or linstor-satellite packages and their dependencies are installed on appropriate nodes.

-

The linstor-client package is installed on the linstor-controller node.
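Before initializing the cluster, a quick check like the following can report missing prerequisites on a node. It is a sketch: it only checks that `drbdadm` (from drbd-utils) and the LVM commands `lvcreate` and `vgs` are on the `PATH` and that a module named `drbd` is loaded; it does not verify that the loaded module is DRBD 9 or that the LINSTOR packages are installed.

```shell
#!/usr/bin/env bash
# Print "missing: <name>" for every argument not found on PATH.
# Returns 0 only if all commands were found.
missing_commands() {
    local cmd rc=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            rc=1
        fi
    done
    return "$rc"
}

# Check drbd-utils (drbdadm), LVM tools (lvcreate, vgs), and a loaded
# drbd module. Does not check the DRBD version.
check_linstor_prereqs() {
    local rc=0
    missing_commands drbdadm lvcreate vgs || rc=1
    if [ ! -d /sys/module/drbd ]; then
        echo "missing: drbd kernel module (not loaded)"
        rc=1
    fi
    return "$rc"
}
```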

Start and enable the linstor-controller service on the host where it has been installed:

# systemctl enable --now linstor-controller

2.6. Using the LINSTOR Client

Whenever you run the LINSTOR command line client, it needs to know on which cluster node the linstor-controller service is running. If you do not specify this, the client will try to reach a locally running linstor-controller service listening on IP address 127.0.0.1 port 3370. Therefore, use the linstor-client on the same host as the linstor-controller.

| The linstor-satellite service requires TCP ports 3366 and 3367. The linstor-controller service requires TCP port 3370. Verify that you have these ports allowed on your firewall. |
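On hosts that use firewalld, opening these ports could look like the following sketch. The use of firewalld is an assumption, so adapt the commands to your firewall. DRBD replication between satellites uses additional per-resource TCP ports, which this sketch does not open. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() { ${DRY_RUN:+echo} "$@"; }

# Permanently open the LINSTOR ports for the given roles, then reload.
# Arguments: one or more of "controller" and "satellite".
open_linstor_ports() {
    local role
    for role in "$@"; do
        case "$role" in
            controller) run firewall-cmd --permanent --add-port=3370/tcp ;;
            satellite)  run firewall-cmd --permanent --add-port=3366-3367/tcp ;;
            *) echo "unknown role: $role" >&2; return 2 ;;
        esac || return
    done
    run firewall-cmd --reload
}
```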

# linstor node list

Output from this command should show you an empty list and not an error message.

You can use the linstor command on any other machine, but then you need to tell the client how to find the LINSTOR controller. As shown, this can be specified as a command line option, or by using an environment variable:

# linstor --controllers=alice node list
# LS_CONTROLLERS=alice linstor node list

If you have configured HTTPS access to the LINSTOR controller REST API and you want the LINSTOR client to access the controller over HTTPS, then you need to use the following syntax:

# linstor --controllers linstor+ssl://<controller-node-name-or-ip-address> node list
# LS_CONTROLLERS=linstor+ssl://<controller-node-name-or-ip-address> linstor node list

2.6.1. Specifying Controllers in the LINSTOR Configuration File

Alternatively, you can create the /etc/linstor/linstor-client.conf file and add a controllers= line in the global section.

[global]
controllers=alice

If you have multiple LINSTOR controllers configured, you can specify them all in a comma-separated list. The LINSTOR client will try them in the order listed.
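The following Python sketch shows how a comma-separated controllers= value could be read and turned into the ordered list that the client tries. It uses the standard-library configparser and is not the LINSTOR client's own code. The fallback to 127.0.0.1:3370 mirrors the default behavior described earlier in this section.

```python
import configparser

# Local controller the client falls back to when nothing is configured.
DEFAULT_CONTROLLER = "127.0.0.1:3370"


def controllers_from_conf(conf_text: str) -> list:
    """Return the controllers from [global] controllers=, in the order listed."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    # fallback also covers a missing [global] section.
    raw = parser.get("global", "controllers", fallback="")
    controllers = [entry.strip() for entry in raw.split(",") if entry.strip()]
    return controllers or [DEFAULT_CONTROLLER]
```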

2.6.2. Using LINSTOR Client Abbreviated Notation

You can use LINSTOR client commands faster and more conveniently by entering only the starting letters of the commands, subcommands, or parameters. For example, rather than entering linstor node list, you can enter the LINSTOR short notation command linstor n l.

Entering the command linstor commands will show a list of possible LINSTOR client commands along with the abbreviated notation for each command. You can use the --help flag with any of these LINSTOR client commands to get the abbreviated notation for the command’s subcommands.
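The short forms used in this guide follow a visible pattern: the first letter of each hyphen-separated word, as in n l (node list), sp l (storage-pool list), rd (resource-definition), and vd l (volume-definition list). The Python sketch below only reproduces that pattern as an illustration. The client defines its aliases itself, so linstor commands remains the authoritative list.

```python
def abbreviate(word: str) -> str:
    """First letter of each hyphen-separated part, e.g. 'storage-pool' -> 'sp'."""
    return "".join(part[0] for part in word.split("-") if part)


def short_notation(*words: str) -> str:
    """Abbreviate each command word, e.g. ('node', 'list') -> 'n l'."""
    return " ".join(abbreviate(word) for word in words)
```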

2.7. Adding Nodes to Your Cluster

After initializing your LINSTOR cluster, the next step is to add nodes to the cluster.

# linstor node create bravo 10.43.70.3

If you omit the IP address, the LINSTOR client will try to resolve the specified node name, bravo in the preceding example, as a hostname. If the hostname does not resolve to a host on the network from the system where the LINSTOR controller service is running, then LINSTOR will show an error message when you try to create the node:

Unable to resolve ip address for 'bravo': [Errno -3] Temporary failure in name resolution

2.7.1. Naming LINSTOR Nodes

If you specify an IP address when you create a LINSTOR node, you can give your node an arbitrary name. The LINSTOR client will show an INFO message about this when you create the node:

[...] 'arbitrary-name' and hostname 'node-1' doesn't match.

LINSTOR automatically detects the created node's local uname --nodename and later uses it in DRBD resource configurations, rather than the arbitrary node name. To avoid confusing yourself and others, in most cases you should use a node's hostname when creating a LINSTOR node.

2.7.2. Starting and Enabling a LINSTOR Satellite Node

When you enter linstor node list, LINSTOR will show that the new node is marked as offline. Now start and enable the LINSTOR satellite service on the new node so that the service also comes up on reboot:

# systemctl enable --now linstor-satellite

After about 10 seconds, linstor node list should show the node's status as online. The satellite service can also be started before the controller knows about the existence of the satellite node.

If the node that hosts your controller should also contribute storage to the LINSTOR cluster, you must also add it as a node and start the linstor-satellite service on it.


If you want other services to wait until linstor-satellite has had a chance to create the necessary devices (for example, after a boot), update the corresponding .service file and change Type=simple to Type=notify.

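This causes the satellite to delay sending the READY=1 message to systemd until the controller connects, sends all required data to the satellite, and the satellite has tried at least once to bring the devices up and running.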

2.7.3. Specifying LINSTOR Node Types

When you create a LINSTOR node, you can also specify a node type. Node type is a label that indicates the role that the node serves within your LINSTOR cluster. Node type can be one of controller, auxiliary, combined, or satellite. For example, to create a LINSTOR node and label it as both a controller and a satellite node, enter the following command:

# linstor node create bravo 10.43.70.3 --node-type combined

The --node-type argument is optional. If you do not specify a node type when you create a node, LINSTOR will use a default type of satellite.

If you want to change a LINSTOR node’s assigned type after creating the node, you can enter a linstor node modify --node-type command.

2.8. Storage Pools

Storage pools identify storage in the context of LINSTOR. To group storage pools from multiple nodes, use the same name on each node. For example, one valid approach is to give all SSDs one name and all HDDs another.

2.8.1. Creating Storage Pools

On each host contributing storage, you need to create either an LVM volume group (VG) or a ZFS zPool. The VGs and zPools identified with one LINSTOR storage pool name might have different VG or zPool names on the hosts, but for consistency, use the same VG or zPool name on all nodes.

# vgcreate vg_ssd /dev/nvme0n1 /dev/nvme1n1 [...]

After creating a volume group on each of your nodes, you can create a storage pool that is backed by the volume group on each of your nodes, by entering the following commands:

# linstor storage-pool create lvm alpha pool_ssd vg_ssd
# linstor storage-pool create lvm bravo pool_ssd vg_ssd

To list your storage pools, enter:

# linstor storage-pool list

or using LINSTOR abbreviated notation:

# linstor sp l

2.8.2. Using Storage Pools To Confine Failure Domains to a Single Back-end Device

In clusters where you have only one kind of storage and the capability to hot swap storage devices, you might choose a model where you create one storage pool per physical backing device. The advantage of this model is to confine failure domains to a single storage device.

2.8.3. Sharing Storage Pools with Multiple Nodes

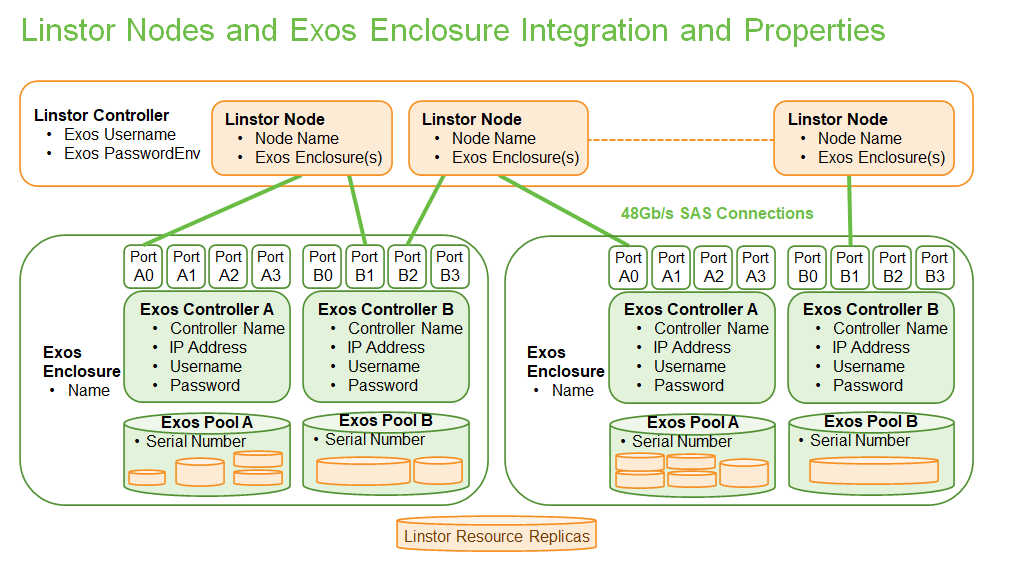

Both the Exos and LVM2 storage providers offer the option of multiple server nodes directly connected to the storage array and drives. With LVM2, the external locking service (lvmlockd) manages volume groups created with the --shared option of vgcreate. You can use the --shared-space option when configuring a LINSTOR pool to use the same LVM2 volume group accessible by two or more nodes. The example below shows using the LVM2 volume group UUID as the shared space identifier for a pool accessible by nodes alpha and bravo:

# linstor storage-pool create lvm --external-locking \
  --shared-space O1btSy-UO1n-lOAo-4umW-ETZM-sxQD-qT4V87 \
  alpha pool_ssd shared_vg_ssd
# linstor storage-pool create lvm --external-locking \
  --shared-space O1btSy-UO1n-lOAo-4umW-ETZM-sxQD-qT4V87 \
  bravo pool_ssd shared_vg_ssd

By default, Exos pools use the Exos pool serial number as the shared space identifier.

As of the release of linstor-server v1.26.0, the Exos integration for LINSTOR is deprecated.


2.8.4. Creating Storage Pools by Using the Physical Storage Command

Since linstor-server 1.5.2 and a recent linstor-client, LINSTOR can create LVM/ZFS pools on a satellite for you. The LINSTOR client has the following commands to list possible disks and create storage pools. However, LINSTOR does not manage such LVM/ZFS pools and there is no delete command, so you must delete them manually on the nodes.

# linstor physical-storage list

This shows a list of available disks grouped by size and rotational type (SSD or magnetic disk).

Only disks that pass the following filters are shown:

-

The device size is greater than 1GiB.

-

The device is a root device (it has no children), for example, /dev/vda or /dev/sda.

-

The device does not have any file system or other blkid marker (wipefs -a might be needed).

-

The device is not a DRBD device.
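The four filters can be expressed as a single predicate. The Python sketch below models each disk with a hypothetical Disk record. How LINSTOR actually gathers size, child devices, and blkid markers on a satellite is not shown here.

```python
from dataclasses import dataclass, field

GIB = 1024 ** 3


@dataclass
class Disk:
    path: str
    size_bytes: int
    children: list = field(default_factory=list)       # partitions or holders
    blkid_markers: list = field(default_factory=list)  # e.g. file system signatures
    is_drbd: bool = False


def is_candidate(disk: Disk) -> bool:
    """Apply the four filters listed above."""
    return (
        disk.size_bytes > GIB        # larger than 1GiB
        and not disk.children        # root device without children
        and not disk.blkid_markers   # no file system or other blkid marker
        and not disk.is_drbd         # not a DRBD device
    )


def candidate_disks(disks) -> list:
    return [disk.path for disk in disks if is_candidate(disk)]
```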

By using the create-device-pool command, you can create an LVM pool on a disk and also directly add it as a storage pool in LINSTOR.

# linstor physical-storage create-device-pool --pool-name lv_my_pool \
  LVMTHIN node_alpha /dev/vdc --storage-pool newpool

If the --storage-pool option was provided, LINSTOR will create a storage pool with the given name.

For more options and exact command usage refer to the LINSTOR client --help text.

2.8.5. Mixing Storage Pools

With some setup and configuration, you can use storage pools of different storage provider types to back a LINSTOR resource. This is called storage pool mixing. For example, you might have a storage pool on one node that uses an LVM thick-provisioned volume while on another node you have a storage pool that uses a thin-provisioned ZFS zpool.

Because most LINSTOR deployments will use homogeneous storage pools to back resources, storage pool mixing is only mentioned here so that you know that the feature exists. It might be a useful feature when migrating storage resources, for example. You can find further details about this, including prerequisites, in Mixing Storage Pools of Different Storage Providers.

2.9. Using Resource Groups to Deploy LINSTOR Provisioned Volumes

Using resource groups to define how you want your resources provisioned should be considered the de facto method for deploying volumes provisioned by LINSTOR. The methods described in the sections that follow, which create each resource from a resource definition and volume definition, should only be used in special scenarios.

| Even if you choose not to create and use resource groups in your LINSTOR cluster, all resources created from resource definitions and volume definitions will exist in the ‘DfltRscGrp’ resource group. |

A simple pattern for deploying resources by using resource groups looks like this:

# linstor resource-group create my_ssd_group --storage-pool pool_ssd --place-count 2
# linstor volume-group create my_ssd_group
# linstor resource-group spawn-resources my_ssd_group my_ssd_res 20G

Running the commands above automatically provisions a resource named ‘my_ssd_res’ with a 20GB volume, replicated twice, on nodes that participate in the storage pool named ‘pool_ssd’.

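A more useful pattern is to create resource groups with the settings that you have determined are optimal for your use case. For example, if you need to run nightly online verification of your volumes for consistency, you can create a resource group that already has ‘verify-alg’ set. Resources spawned from that group will then have ‘verify-alg’ preconfigured.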

# linstor resource-group create my_verify_group --storage-pool pool_ssd --place-count 2

# linstor resource-group drbd-options --verify-alg crc32c my_verify_group

# linstor volume-group create my_verify_group

# for i in {00..19}; do
    linstor resource-group spawn-resources my_verify_group res$i 10G
  done

The commands above result in twenty 10GiB resources being created each with the crc32c verify-alg pre-configured.

You can tune the settings of individual resources or volumes spawned from resource groups by setting options on the respective resource definition or volume definition LINSTOR objects. For example, if res11 from the preceding example is used by a very active database receiving many small random writes, you might want to increase the al-extents for that specific resource:

# linstor resource-definition drbd-options --al-extents 6007 res11

If you configure a setting in a resource definition that is already configured on the resource group it was spawned from, the value set in the resource definition will override the value set on the parent resource group. For example, if the same res11 was required to use the slower but more secure sha256 hash algorithm in its verifications, setting the verify-alg on the resource definition for res11 would override the value set on the resource group:

# linstor resource-definition drbd-options --verify-alg sha256 res11

| A guiding rule for the hierarchy in which settings are inherited is that the value “closer” to the resource or volume wins. Volume definition settings take precedence over volume group settings, and resource definition settings take precedence over resource group settings. |
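The "closer wins" rule amounts to a first-match lookup over an ordered list of levels. The Python sketch below uses plain dictionaries keyed by the option names from the examples above. LINSTOR itself stores these settings as namespaced properties (for example, under DrbdOptions/), so treat the keys as illustrative. The controller is assumed here to be the outermost level. The same function works for volume definitions and volume groups if you pass the volume-level objects instead.

```python
def effective_value(key, *levels):
    """Return key's value from the closest level that sets it, else None.

    Pass levels from closest to farthest, for example:
    resource definition, resource group, controller.
    """
    for props in levels:
        if key in props:
            return props[key]
    return None


# Settings from the examples above (keys simplified for illustration).
controller = {}
my_verify_group = {"verify-alg": "crc32c"}
res11 = {"verify-alg": "sha256", "al-extents": 6007}
res12 = {}

print(effective_value("verify-alg", res11, my_verify_group, controller))  # sha256
print(effective_value("verify-alg", res12, my_verify_group, controller))  # crc32c
```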

2.11. Creating and Deploying Resources and Volumes

You can use the LINSTOR create command to create various LINSTOR objects, such as resource definitions, volume definitions, and resources. Some of these commands are shown below.

In the following example scenario, assume that you have a goal of creating a resource named backups with a size of 500GiB that is replicated among three cluster nodes.

First, create a new resource definition:

# linstor resource-definition create backups

Second, create a new volume definition within that resource definition:

# linstor volume-definition create backups 500G

If you want to resize (grow or shrink) the volume definition you can do that by specifying a new size with the set-size command:

# linstor volume-definition set-size backups 0 100G

| The size of a volume definition can only be decreased if it has no associated resource. However, you can freely increase the size of a volume definition, even one having a deployed resource. |

The parameter 0 is the number of the volume in the resource backups. You have to provide this parameter because resources can have multiple volumes that are identified by a so-called volume number. You can find this number by listing the volume definitions (linstor vd l). The list table will show volume numbers for the listed resources.

So far you have only created definition objects in LINSTOR’s database. No logical volumes (LVs) have been created on the satellite nodes yet. You can now either delegate the task of deploying resources to LINSTOR or deploy them yourself.

2.11.1. Placing Resources Manually

With the resource create command you can assign a resource definition to named nodes explicitly.

# linstor resource create alpha backups --storage-pool pool_hdd
# linstor resource create bravo backups --storage-pool pool_hdd
# linstor resource create charlie backups --storage-pool pool_hdd

2.11.2. Automating DRBD Resources

When you create (spawn) a resource from a resource group, it is possible to have LINSTOR automatically select nodes and storage pools to deploy the resource to. You can use the arguments mentioned in this section to specify constraints when you create or modify a resource group. These constraints will affect how LINSTOR automatically places resources that are deployed from the resource group.

Automatically Maintaining Resource Group Placement Count

Starting with LINSTOR version 1.26.0, a recurring LINSTOR task tries to maintain the placement count set on a resource group for all deployed LINSTOR resources that belong to that resource group. This includes the default LINSTOR resource group and its placement count.

If you want to disable this behavior, set the BalanceResourcesEnabled property to false on the LINSTOR controller, on the LINSTOR resource groups that your resources belong to, or on the resource definitions themselves. Because of the LINSTOR object hierarchy, a property set on a resource group overrides the property value on the LINSTOR controller. Likewise, a property set on a resource definition overrides the property value on the resource group that the resource definition belongs to.

You can set additional properties related to this feature on the LINSTOR controller:

BalanceResourcesInterval

The interval in seconds, at the LINSTOR controller level, that the balance resource placement task is triggered. By default, the interval is 3600 seconds (one hour).

BalanceResourcesGracePeriod

The period in seconds for how long new resources (after being created or spawned) are ignored for balancing. By default, the grace period is 3600 seconds (one hour).
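The two decisions described above can be sketched in Python: whether balancing applies to a resource at all, which follows the resource definition, then resource group, then controller order, and whether a new resource is still inside its grace period. The PROP constant and function names are hypothetical. Whether the grace period boundary is inclusive is also an assumption. Properties are given as strings, as LINSTOR stores them.

```python
PROP = "BalanceResourcesEnabled"
DEFAULT_GRACE_PERIOD_S = 3600  # BalanceResourcesGracePeriod default (one hour)


def balancing_enabled(rsc_def_props: dict, rsc_grp_props: dict, ctrl_props: dict) -> bool:
    """Resource definition overrides resource group, which overrides controller."""
    for props in (rsc_def_props, rsc_grp_props, ctrl_props):
        if PROP in props:
            return props[PROP].strip().lower() == "true"
    return True  # the task is active unless it is disabled somewhere


def in_grace_period(created_at_s: float, now_s: float,
                    grace_period_s: float = DEFAULT_GRACE_PERIOD_S) -> bool:
    """True while a newly created or spawned resource is still ignored."""
    return now_s - created_at_s < grace_period_s


def should_balance(rsc_def_props, rsc_grp_props, ctrl_props, created_at_s, now_s) -> bool:
    return (balancing_enabled(rsc_def_props, rsc_grp_props, ctrl_props)
            and not in_grace_period(created_at_s, now_s))
```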

Placement Count

By using the --place-count <replica_count> argument when you create or modify a resource group, you can specify the number of nodes in your cluster on which LINSTOR should place diskful resources created from the resource group.

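Creating a resource group with impossible placement constraints

You can create or modify a resource group and specify a placement count or other constraint that would be impossible for LINSTOR to fulfill. For example, you could specify a placement count that is greater than the number of nodes in your cluster. When you then try to create (spawn) a resource from the resource group, LINSTOR will show an error message such as:

ERROR:

Description:

Not enough available nodes

[...]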

Storage Pool Placement

In the following example, the value after the --place-count option tells LINSTOR how many replicas you want to have. The --storage-pool option specifies the storage pool to place them in.

# linstor resource-group create backups --place-count 3 --storage-pool pool_hdd

What might not be obvious is that you can omit the --storage-pool option. If you do this, then LINSTOR can select a storage pool on its own when you create (spawn) resources from the resource group. The selection follows these rules:

-

Ignore all nodes and storage pools that the current user cannot access.

-

Ignore all diskless storage pools.

-

Ignore storage pools that do not have enough free space.

The remaining storage pools will be rated by different strategies.

MaxFreeSpace

This strategy maps the rating 1:1 to the remaining free space of the storage pool. However, this strategy only considers the actually allocated space (in the case of a thin-provisioned storage pool, this might grow over time without new resources being created).

MinReservedSpace

Unlike “MaxFreeSpace”, this strategy considers the reserved space, that is, the space that a thin volume can grow to before reaching its limit. The sum of reserved spaces might exceed the storage pool’s capacity, which is known as overprovisioning.

MinRscCount

The number of resources already deployed in a given storage pool.

MaxThroughput

For this strategy, the storage pool’s Autoplacer/MaxThroughput property is the base of the score, or 0 if the property is not present. Every volume deployed in the given storage pool subtracts its defined sys/fs/blkio_throttle_read and sys/fs/blkio_throttle_write property values from the storage pool’s max throughput. The resulting score might be negative.

The scores of each strategy are normalized, weighted, and summed. The scores of minimizing strategies are converted first, so that the resulting score can be maximized overall.

You can configure the weights of the strategies to affect how LINSTOR selects a storage pool for resource placement when creating (spawning) resources for which you did not specify a storage pool. You do this by setting the following properties on the LINSTOR controller object. The weight can be an arbitrary decimal value.

# linstor controller set-property Autoplacer/Weights/MaxFreeSpace <weight>
# linstor controller set-property Autoplacer/Weights/MinReservedSpace <weight>
# linstor controller set-property Autoplacer/Weights/MinRscCount <weight>
# linstor controller set-property Autoplacer/Weights/MaxThroughput <weight>

To keep the Autoplacer behavior compatible with previous LINSTOR versions, all strategies have a default weight of 0, except MaxFreeSpace, which has a weight of 1.


| Neither a score of 0 nor a negative score prevents a storage pool from being selected. Storage pools with these scores are only considered later. |

Finally, LINSTOR tries to find the best matching set of storage pools that meets all requirements. This step also takes into account other auto-placement restrictions, such as --replicas-on-same, --replicas-on-different, --do-not-place-with, --do-not-place-with-regex, --layer-list, and --providers.
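The following Python sketch combines the filter rules with the weighted scoring. The exact normalization LINSTOR uses is not described here, so the sketch makes two assumptions: minimizing strategies are converted by negation, and each strategy's values are divided by the largest absolute value among the candidate pools. The Pool fields are hypothetical stand-ins for each strategy's input. User access checks and the final constraint-matching step are not modeled.

```python
from dataclasses import dataclass

# Default weights described above: only MaxFreeSpace counts.
DEFAULT_WEIGHTS = {"MaxFreeSpace": 1.0, "MinReservedSpace": 0.0,
                   "MinRscCount": 0.0, "MaxThroughput": 0.0}
MINIMIZING = {"MinReservedSpace", "MinRscCount"}


@dataclass
class Pool:
    name: str
    free_space: float        # input for MaxFreeSpace
    reserved_space: float    # input for MinReservedSpace
    rsc_count: int           # input for MinRscCount
    throughput: float = 0.0  # input for MaxThroughput, may be negative
    diskless: bool = False

    def raw(self, strategy: str) -> float:
        return {"MaxFreeSpace": self.free_space,
                "MinReservedSpace": self.reserved_space,
                "MinRscCount": self.rsc_count,
                "MaxThroughput": self.throughput}[strategy]


def rank_pools(pools, required_size, weights=DEFAULT_WEIGHTS):
    """Filter unusable pools, then order the rest from best to worst score."""
    candidates = [p for p in pools if not p.diskless and p.free_space >= required_size]
    totals = {p.name: 0.0 for p in candidates}
    for strategy, weight in weights.items():
        # Negate minimizing strategies so that a higher value is always better.
        values = [-p.raw(strategy) if strategy in MINIMIZING else p.raw(strategy)
                  for p in candidates]
        scale = max((abs(v) for v in values), default=0.0)
        for pool, value in zip(candidates, values):
            totals[pool.name] += weight * (value / scale if scale else 0.0)
    # Zero or negative totals are kept: such pools are only considered later.
    return sorted(totals, key=totals.get, reverse=True)
```

With the default weights, the pool with the most free space ranks first. Raising the MinRscCount weight, as you would with the Autoplacer/Weights/MinRscCount property, favors pools that hold fewer resources.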

Avoiding Colocating Resources When Automatically Placing a Resource

The --do-not-place-with <resource_name_to_avoid> argument specifies that LINSTOR should try to avoid placing a resource on nodes that already have the specified resource, resource_name_to_avoid, deployed.

By using the --do-not-place-with-regex <regular_expression> argument, you can specify that LINSTOR should try to avoid placing a resource on nodes that already have a resource deployed whose name matches the regular expression that you provide with the argument. In this way, you can specify multiple resources to try to avoid placing your resource with.
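The effect of the regular expression can be illustrated by computing which nodes LINSTOR would try to avoid. The Python sketch below assumes that the whole resource name must match (re.fullmatch). LINSTOR's exact matching semantics are not stated here, so check them before relying on partial patterns. Because LINSTOR only tries to avoid these nodes, the result is a preference, not a hard exclusion.

```python
import re


def nodes_to_avoid(deployed: dict, name_regex: str) -> set:
    """Nodes already hosting a resource whose name matches name_regex.

    deployed maps a node name to the set of resource names on that node.
    """
    pattern = re.compile(name_regex)
    return {node for node, resources in deployed.items()
            if any(pattern.fullmatch(name) for name in resources)}


def preferred_nodes(deployed: dict, name_regex: str) -> list:
    """Nodes LINSTOR would prefer: those without a matching resource."""
    avoid = nodes_to_avoid(deployed, name_regex)
    return sorted(node for node in deployed if node not in avoid)
```

A single name, as with --do-not-place-with, corresponds to re.escape(name) in this model.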

Constraining Automatic Resource Placement by Auxiliary Node Properties

You can constrain automatic resource placement to place (or avoid placing) a resource on nodes that have auxiliary node properties that you specify.

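| This ability can be particularly useful if you are trying to constrain resource placement within Kubernetes environments that use LINSTOR managed storage. For example, you might set auxiliary node properties that correspond to Kubernetes labels. See the “replicasOnSame” section within the “LINSTOR Volumes in Kubernetes” chapter of the LINSTOR User’s Guide for more details about this use case. |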

The --replicas-on-same and --replicas-on-different arguments expect the name of a property within the Aux/ namespace.

The following example shows setting an auxiliary node property, testProperty, on three LINSTOR satellite nodes. Next, you create a resource group, testRscGrp, with a placement count of two and a constraint to place spawned resources on nodes that have a testProperty value of 1. After creating a volume group, you can spawn a resource from the resource group. For simplicity, output from the following commands is not shown.

# for i in {0,2}; do linstor node set-property --aux node-$i testProperty 1; done

# linstor node set-property --aux node-1 testProperty 0

# linstor resource-group create testRscGrp --place-count 2 --replicas-on-same testProperty=1

# linstor volume-group create testRscGrp

# linstor resource-group spawn-resources testRscGrp testResource 100M

You can verify the placement of the spawned resource by using the following command:

# linstor resource list
+-------------------------------------------------------------------------------------+
| ResourceName | Node   | Port | Usage  | Conns | State    | CreatedOn           |
|=====================================================================================|
| testResource | node-0 | 7000 | Unused | Ok    | UpToDate | 2022-07-27 16:14:16 |
| testResource | node-2 | 7000 | Unused | Ok    | UpToDate | 2022-07-27 16:14:16 |
+-------------------------------------------------------------------------------------+

Because of the --replicas-on-same constraint, LINSTOR did not place the spawned resource on satellite node node-1: the value of its auxiliary node property, testProperty, was 0 and not 1.

You can verify the node properties of node-1 by using the list-properties command:

# linstor node list-properties node-1
+----------------------------+
| Key              | Value   |
|============================|
| Aux/testProperty | 0       |
| CurStltConnName  | default |
| NodeUname        | node-1  |
+----------------------------+
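The filtering that the key=value form of --replicas-on-same performs can be sketched in Python with the property values from this example. As the list-properties output shows, the property is stored under the Aux/ prefix. The function names are hypothetical. The sketch only handles the key=value form used here, not --replicas-on-different or other argument forms.

```python
def parse_constraint(constraint: str):
    """Split 'key=value' as passed to --replicas-on-same into (Aux/key, value)."""
    key, sep, value = constraint.partition("=")
    if not sep:
        raise ValueError("this sketch only handles the key=value form")
    return "Aux/" + key, value


def eligible_nodes(node_props: dict, constraint: str) -> list:
    """Nodes whose auxiliary property matches the constraint."""
    key, value = parse_constraint(constraint)
    return sorted(node for node, props in node_props.items() if props.get(key) == value)


# Properties as set in the example above.
node_props = {
    "node-0": {"Aux/testProperty": "1"},
    "node-1": {"Aux/testProperty": "0", "NodeUname": "node-1"},
    "node-2": {"Aux/testProperty": "1"},
}
print(eligible_nodes(node_props, "testProperty=1"))  # ['node-0', 'node-2']
```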

Constraining Automatic Resource Placement by LINSTOR Layers or Storage Pool Providers

You can specify the --layer-list or --providers arguments, followed by a comma-separated values (CSV) list of LINSTOR layers or storage pool providers, to influence where LINSTOR places resources. To show the layers and storage pool providers that you can specify in your CSV list, use the --help option with the --auto-place option. A CSV list of layers would constrain automatic resource placement for a specified resource group to nodes that have storage that conforms to your list. Consider the following command:

# linstor resource-group create my_luks_rg --place-count 3 --layer-list drbd,luks

Resources that you might later create (spawn) from this resource group would be deployed across three nodes having storage pools backed by a DRBD layer backed by a LUKS layer (and implicitly backed by a “storage” layer). The order of layers that you specify in your CSV list is “top-down”, where a layer on the left in the list is above a layer on its right.

The --providers argument can be used to constrain automatic resource placement to only storage pools that match those in a specified CSV list. You can use this argument to have explicit control over which storage pools will back your deployed resource. If, for example, you had a mixed environment of ZFS, LVM, and LVM_THIN storage pools in your cluster, by using the --providers LVM,LVM_THIN argument, you can specify that a resource only gets backed by either an LVM or LVM_THIN storage pool, when using the --place-count option.

The --providers argument’s CSV list does not specify an order of priority for the list elements. Instead, when placing a resource, LINSTOR will use factors like additional placement constraints, available free space, and LINSTOR’s storage pool selection strategies that were previously described.
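Because the --providers list is only a filter and not a ranking, it behaves like set membership. The Python sketch below shows this with hypothetical pool names. Reordering the CSV list gives the same result, and the remaining pools would still be ranked by the selection strategies described earlier.

```python
def filter_by_providers(pools, providers_csv: str) -> list:
    """Keep pools whose provider is in the CSV list; list order is ignored.

    pools is a list of (pool_name, provider) tuples.
    """
    allowed = {p.strip().upper() for p in providers_csv.split(",") if p.strip()}
    return [name for name, provider in pools if provider in allowed]


# Hypothetical mixed environment from the text above.
pools = [("zfs_pool", "ZFS"), ("lvm_pool", "LVM"), ("thin_pool", "LVM_THIN")]
print(filter_by_providers(pools, "LVM,LVM_THIN"))  # ['lvm_pool', 'thin_pool']
```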


Automatically Placing Resources When Creating Them

While using resource groups to create templates from which you can create (spawn) resources is the standard way to create resources, you can also create resources directly by using the resource create command. When you use this command, you can also specify arguments that affect how LINSTOR will place the resource in your storage cluster.

With the exception of the placement count argument, the arguments that you can specify when you use the resource create command that affect where LINSTOR places the resource are the same as those for the resource-group create command. Specifying an --auto-place <replica_count> argument with a resource create command is the same as specifying a --place-count <replica_count> argument with a resource-group create command.

Using Auto-place to Extend Existing Resource Deployments

Besides the argument name, there is another difference between the placement count argument for the resource group and resource create commands. With the resource create command, you can also specify a value of +1 with the --auto-place argument, if you want to extend existing resource deployments.

With this value, LINSTOR will create an additional replica, regardless of the --place-count value configured on the resource group that the resource was created from.

For example, you can use an --auto-place +1 argument to deploy an additional replica of the testResource resource used in a previous example. You will first need to set the auxiliary node property, testProperty to 1 on node-1. Otherwise, LINSTOR will not be able to deploy the replica because of the previously configured --replicas-on-same constraint. For simplicity, not all output from the commands below is shown.

# linstor node set-property --aux node-1 testProperty 1
# linstor resource create --auto-place +1 testResource
# linstor resource list
+-------------------------------------------------------------------------------------+
| ResourceName | Node   | Port | Usage  | Conns | State    | CreatedOn           |
|=====================================================================================|
| testResource | node-0 | 7000 | Unused | Ok    | UpToDate | 2022-07-27 16:14:16 |
| testResource | node-1 | 7000 | Unused | Ok    | UpToDate | 2022-07-28 19:27:30 |
| testResource | node-2 | 7000 | Unused | Ok    | UpToDate | 2022-07-27 16:14:16 |
+-------------------------------------------------------------------------------------+

WARNING: The +1 value is not valid for the resource-group create --place-count command. This is because that command does not deploy resources. It only creates templates from which to deploy them later.

2.12. Deleting Resources, Resource Definitions, and Resource Groups

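You can delete LINSTOR resources, resource definitions, and resource groups by using the delete command after the LINSTOR object that you want to delete. Depending on which object you delete, there will be different implications for your LINSTOR cluster and other associated LINSTOR objects.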

2.12.1. Deleting a Resource Definition

You can delete a resource definition by using the following command:

# linstor resource-definition delete <resource_definition_name>

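This removes the named resource definition from the entire LINSTOR cluster. The resource is removed from all nodes and the resource entry is marked for removal from LINSTOR’s database tables. After LINSTOR has removed the resource from all the nodes, the resource entry is removed from LINSTOR’s database tables.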

| If your resource definition has existing snapshots, you will not be able to delete the resource definition until you delete its snapshots. See the Deleting Snapshots section in this guide. |

2.12.2. Deleting a Resource

You can delete a resource by using the following command:

# linstor resource delete <node_name> <resource_name>

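Unlike deleting a resource definition, this command only deletes a LINSTOR resource from the node (or nodes) that you specify. The resource is removed from the node and the resource entry is marked for removal from LINSTOR’s database tables. After LINSTOR has removed the resource from the node, the resource entry is removed from LINSTOR’s database tables.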

Deleting a LINSTOR resource might have implications for a cluster, beyond just removing the resource. For example, if the resource is backed by a DRBD layer, removing a resource from one node in a three-node cluster could also remove certain quorum-related DRBD options, if any existed for the resource. After removing such a resource from a node in a three-node cluster, the resource would no longer have quorum, because it would now be deployed on only two nodes in the three-node cluster.

If you remove a resource from a single node by entering a linstor resource delete command, you might see informational messages such as:

INFO:

Resource-definition property 'DrbdOptions/Resource/quorum' was removed as there are not enough resources for quorum

INFO:

Resource-definition property 'DrbdOptions/Resource/on-no-quorum' was removed as there are not enough resources for quorum
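These properties are removed because a majority can no longer be maintained once a resource is deployed on only two nodes. The Python sketch below shows the arithmetic behind a majority policy. It is a simplified model for illustration only. DRBD's actual quorum handling also accounts for diskless tiebreaker nodes and other details, which the sketch ignores.

```python
def has_quorum(reachable: int, total: int) -> bool:
    """Simplified majority rule: strictly more than half of all replicas."""
    return reachable > total / 2


def survives_one_failure(total: int) -> bool:
    """Does a node that loses contact with one peer keep quorum?"""
    return has_quorum(total - 1, total)


for replicas in (3, 2):
    print(replicas, survives_one_failure(replicas))
# prints "3 True" then "2 False"
```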

Also unlike deleting a resource definition, you can delete a resource while snapshots of the resource’s storage pool exist. Any existing snapshots of the resource’s storage pool are kept.

2.12.3. Deleting a Resource Group

You can delete a resource group by using the following command:

# linstor resource-group delete <resource_group_name>

This command deletes the specified resource group. You can only delete a resource group if it has no associated resource definitions. Otherwise, LINSTOR will show an error message, such as:

ERROR:

Description:

Cannot delete resource group 'my_rg' because it has existing resource definitions.

To resolve this error so that you can delete the resource group, either delete the associated resource definitions or move them to another (existing) resource group:

# linstor resource-definition modify <resource_definition_name> \
  --resource-group <another_resource_group_name>

To find which resource definitions are associated with your resource group, enter the following command:

# linstor resource-definition list

2.13. Backup and Restore Database

Since version 1.24.0, LINSTOR has a tool that you can use to export and import a LINSTOR database.

This tool has an executable file called /usr/share/linstor-server/bin/linstor-database. This executable has two subcommands, export-db and import-db. Both subcommands accept an optional --config-directory argument that you can use to specify the directory containing the linstor.toml configuration file.

| To ensure a consistent database backup, take the controller offline by stopping the controller service as shown in the commands below, before creating a backup of the LINSTOR database. |

2.13.1. Backing Up the Database

To back up the LINSTOR database to a new file named db_export in your home directory, enter the following commands:

# systemctl stop linstor-controller
# /usr/share/linstor-server/bin/linstor-database export-db ~/db_export
# systemctl start linstor-controller

You can use the --config-directory argument with the linstor-database utility to specify a LINSTOR configuration directory if needed. If you omit this argument, the utility uses the /etc/linstor directory by default.

After backing up the database, you can copy the backup file to a safe place.

# cp ~/db_export <somewhere safe>

The resulting database backup is a plain JSON document, containing not just the actual data, but also some metadata about when the backup was created, from which database, and other information.
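Because the export is plain JSON, you can sanity-check a copy by parsing it before you rely on it, for example after moving it to another system. The following Python sketch only parses the file and lists its top-level keys. It assumes no particular key names, because this section does not describe the exact layout of the export.

```python
import json
from pathlib import Path


def top_level_keys(text: str) -> list:
    """Parse export-db output and return its top-level keys, sorted."""
    document = json.loads(text)  # raises json.JSONDecodeError if the copy is damaged
    if not isinstance(document, dict):
        raise ValueError("expected a JSON object at the top level")
    return sorted(document)


def backup_top_level_keys(path) -> list:
    """Same check, reading the backup file from disk (e.g. '~/db_export')."""
    return top_level_keys(Path(path).expanduser().read_text(encoding="utf-8"))
```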

2.13.2. Restoring the Database From a Backup

Restoring the database from a previously made backup is similar to export-db from the previous section.

For example, to restore the previously made backup from the db_export file, enter the following commands:

# systemctl stop linstor-controller
# /usr/share/linstor-server/bin/linstor-database import-db ~/db_export
# systemctl start linstor-controller

You can only import a database from a previous backup if the currently installed version of LINSTOR is the same as, or higher than, the version that you created the backup from. If the currently installed LINSTOR version is higher than the version that the database backup was created from, the import restores the data with the database schema of the version used during the export. The next time the controller starts, it will detect that the database has an old schema and automatically migrate the data to the schema of the current version.
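The version rule above can be expressed as a tuple comparison. The Python sketch below compares versions numerically rather than as strings, so that 1.10.0 correctly counts as newer than 1.9.0. It assumes plain dotted version numbers without pre-release suffixes.

```python
def parse_version(version: str) -> tuple:
    """Turn '1.24.0' into (1, 24, 0); pre-release suffixes are not handled."""
    return tuple(int(part) for part in version.split("."))


def can_import(installed: str, backup: str) -> bool:
    """Import is only possible into the same or a newer LINSTOR version."""
    return parse_version(installed) >= parse_version(backup)


def needs_migration(installed: str, backup: str) -> bool:
    """A newer controller migrates the old schema on its next start."""
    return parse_version(installed) > parse_version(backup)
```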

2.13.3. Converting Databases

Since the exported database file contains some metadata, an exported database file can be imported into a different database type than it was exported from.

This allows the user to convert, for example, from an etcd setup to an SQL based setup. There is no special command for converting the database format. You only have to specify the correct linstor.toml configuration file by using the --config-directory argument (or updating the default /etc/linstor/linstor.toml and specifying the database type that you want to use before importing). See the LINSTOR User’s Guide for more information about specifying a database type. Regardless of the type of database that the backup was created from, it will be imported in the database type that is specified in the linstor.toml configuration file.

3. LINSTOR Advanced Tasks

3.1. Creating a Highly Available LINSTOR Cluster

By default a LINSTOR cluster consists of exactly one active LINSTOR controller node. Making LINSTOR highly available involves providing replicated storage for the controller database, multiple LINSTOR controller nodes where only one is active at a time, and a service manager (here DRBD Reactor) that takes care of mounting and unmounting the highly available storage as well as starting and stopping the LINSTOR controller service on nodes.

3.1.1. Configuring Highly Available Storage

For configuring the highly available (HA) storage, you can use LINSTOR itself. One of the benefits of having the storage under LINSTOR control is that you can easily extend the HA storage to new cluster nodes.

First, create a new resource that is 200MiB in size and set the necessary DRBD options, as shown in the example commands below. You will need to adapt the storage pool name to match an existing storage pool in your environment.

# linstor resource-definition create linstor_db
# linstor rd drbd-options --auto-promote=no linstor_db
# linstor rd drbd-options --quorum=majority linstor_db
# linstor rd drbd-options --on-suspended-primary-outdated=force-secondary linstor_db
# linstor rd drbd-options --on-no-quorum=io-error linstor_db
# linstor rd drbd-options --on-no-data-accessible=io-error linstor_db
# linstor rd drbd-options --rr-conflict=retry-connect linstor_db
# linstor volume-definition create linstor_db 200M
# linstor resource create linstor_db --storage-pool pool1 --auto-place 3

It is crucial that your cluster qualifies for auto-quorum and uses the io-error policy (see the section on auto-quorum policies), and that auto-promote is disabled.
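The --quorum=majority option and the three replicas placed by --auto-place 3 work together. As a simplified illustration (plain majority voting only; real DRBD quorum handling also considers diskless tiebreaker nodes and other details that are not modeled here), the following Python sketch computes how many nodes must be reachable for a resource to keep quorum, and how many node failures a given replica count tolerates:

```python
# Simplified majority-quorum model; NOT DRBD's actual quorum implementation.


def votes_needed(total_nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    if total_nodes < 1:
        raise ValueError("a resource needs at least one node")
    return total_nodes // 2 + 1


def has_quorum(reachable_including_self: int, total_nodes: int) -> bool:
    """True if this node plus the peers it can reach form a majority."""
    return reachable_including_self >= votes_needed(total_nodes)


def tolerated_failures(total_nodes: int) -> int:
    """How many nodes can be lost while a majority still remains."""
    return total_nodes - votes_needed(total_nodes)


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(f"{n} nodes: need {votes_needed(n)}, tolerate {tolerated_failures(n)} failure(s)")
```

In this simplified model, three replicas let a node that still sees one peer keep quorum, so the controller database survives the loss of any single node, while two replicas tolerate no failure at all.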

From now on, it is assumed that the resource’s name is linstor_db. After creating the linstor_db resource, you can move the LINSTOR database to the new storage and create a systemd mount service. First, stop the current controller service and disable it, as it will be managed by DRBD Reactor later.

# systemctl disable --now linstor-controller

Next, create the systemd mount service.

# cat << EOF > /etc/systemd/system/var-lib-linstor.mount
[Unit]
Description=Filesystem for the LINSTOR controller

[Mount]
# you can use the minor like /dev/drbdX or the udev symlink
What=/dev/drbd/by-res/linstor_db/0
Where=/var/lib/linstor
EOF

# mv /var/lib/linstor{,.orig}
# mkdir /var/lib/linstor
# chattr +i /var/lib/linstor  # only if on LINSTOR >= 1.14.0
# drbdadm primary linstor_db
# mkfs.ext4 /dev/drbd/by-res/linstor_db/0
# systemctl start var-lib-linstor.mount
# cp -r /var/lib/linstor.orig/* /var/lib/linstor
# systemctl start linstor-controller

Copy the /etc/systemd/system/var-lib-linstor.mount mount file to all the cluster nodes that you want to have the potential to run the LINSTOR controller service (standby controller nodes).

Again, do not enable any of these services with systemctl enable, because DRBD Reactor will manage them.

3.1.2. Multiple LINSTOR Controllers

The next step is to install LINSTOR controllers on all nodes that have access to the linstor_db DRBD resource (as they need to mount the DRBD volume) and that you want to become possible LINSTOR controllers. It is important that the controllers are managed by drbd-reactor, so verify that the linstor-controller.service is disabled on all nodes! To be sure, execute systemctl disable linstor-controller on all cluster nodes, and systemctl stop linstor-controller on all nodes except the one where it is currently running from the previous step. Also verify that you have set chattr +i /var/lib/linstor on all potential controller nodes if you use LINSTOR version 1.14.0 or later.

3.1.3. Managing the Services

For starting and stopping the mount service and the linstor-controller service, use DRBD Reactor. Install this component on all nodes that could become a LINSTOR controller and edit their /etc/drbd-reactor.d/linstor_db.toml configuration file. It should contain an enabled promoter plugin section like this:

[[promoter]]
id = "linstor_db"
[promoter.resources.linstor_db]
start = ["var-lib-linstor.mount", "linstor-controller.service"]

Depending on your requirements, you might also want to set an on-stop-failure action and set stop-services-on-exit.

Then restart drbd-reactor and enable it on all the nodes you configured it on.

# systemctl restart drbd-reactor
# systemctl enable drbd-reactor

Check that there are no warnings from the drbd-reactor service in the logs by running systemctl status drbd-reactor. As there is already an active LINSTOR controller, things will just stay the way they are. Run drbd-reactorctl status linstor_db to check the health of the linstor_db target unit.

The last but nevertheless important step is to configure the LINSTOR satellite services to not delete (and then regenerate) the resource file for the LINSTOR controller DB at its startup. Do not edit the service files directly, but use systemctl edit. Edit the service file on all nodes that could become a LINSTOR controller and that are also LINSTOR satellites.

# systemctl edit linstor-satellite
[Service]
Environment=LS_KEEP_RES=linstor_db

After this change, you should execute systemctl restart linstor-satellite on all satellite nodes.

| Be sure to configure your LINSTOR client for use with multiple controllers as described in the section titled Using the LINSTOR Client, and verify that you have also configured your integration plugins (for example, the Proxmox plugin) to be ready for multiple LINSTOR controllers. |

3.2. DRBD Clients

By using the --drbd-diskless option instead of --storage-pool, you can have a permanently diskless DRBD device on a node. This means that the resource will appear as a block device and can be mounted in the file system without an existing storage device. The data of the resource is accessed over the network on another node with the same resource.

# linstor resource create delta backups --drbd-diskless

| The --diskless option is deprecated. Use the --drbd-diskless or --nvme-initiator options instead. |

3.3. LINSTOR – DRBD Consistency Group/Multiple Volumes

The so-called consistency group is a DRBD feature. It is mentioned in this user’s guide because one of LINSTOR’s main functions is to manage storage clusters with DRBD. Multiple volumes in one resource form a consistency group.

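This means that changes to different volumes of one resource are replicated to the other satellites in the same chronological order.

Therefore, you do not have to worry about timing if you have interdependent data on different volumes in a resource.

To deploy more than one volume in a LINSTOR resource, you have to create two volume definitions with the same resource name.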

# linstor volume-definition create backups 500G
# linstor volume-definition create backups 100G

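3.4. Placing Volumes of One Resource in Different Storage Pools

You can create volumes of one resource in different storage pools by setting the StorPoolName property on the volume definitions before you deploy the resource to the nodes: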

# linstor resource-definition create backups
# linstor volume-definition create backups 500G
# linstor volume-definition create backups 100G
# linstor volume-definition set-property backups 0 StorPoolName pool_hdd
# linstor volume-definition set-property backups 1 StorPoolName pool_ssd
# linstor resource create alpha backups
# linstor resource create bravo backups
# linstor resource create charlie backups

| Because the volume-definition create commands are used without the --vlmnr option, LINSTOR assigned the volume numbers starting at 0. In the two volume-definition set-property commands, the 0 and 1 refer to these automatically assigned volume numbers. |

Here the ‘resource create’ commands do not need a --storage-pool option. In this case, LINSTOR uses a ‘fallback’ storage pool. To find that storage pool, LINSTOR queries the properties of the following objects, in this order:

- Volume definition
- Resource
- Resource definition
- Node

If none of those objects contain a StorPoolName property, the controller falls back to a hard-coded ‘DfltStorPool’ string as a storage pool.

This also means that if you forget to define a storage pool before deploying a resource, you will get an error message saying that LINSTOR could not find the storage pool named ‘DfltStorPool’.
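The lookup order above can be modeled as a first-match search. The following Python sketch only illustrates the documented order and is not LINSTOR's implementation; plain dictionaries stand in for the property sets of the volume definition, resource, resource definition, and node.

```python
from typing import Mapping

# Hard-coded default named in this guide.
FALLBACK_POOL = "DfltStorPool"


def resolve_storage_pool(
    volume_definition: Mapping[str, str],
    resource: Mapping[str, str],
    resource_definition: Mapping[str, str],
    node: Mapping[str, str],
) -> str:
    """Return the first StorPoolName found, searching in the documented order.

    Illustration only: dictionaries stand in for LINSTOR object properties.
    """
    for props in (volume_definition, resource, resource_definition, node):
        pool = props.get("StorPoolName")
        if pool is not None:
            return pool
    # Nothing set anywhere: deployment fails unless a pool with this
    # name actually exists on the node.
    return FALLBACK_POOL


if __name__ == "__main__":
    # Volume 1 of "backups" from the example: set on the volume definition.
    print(resolve_storage_pool({"StorPoolName": "pool_ssd"}, {}, {}, {}))
    # No StorPoolName on any object.
    print(resolve_storage_pool({}, {}, {}, {}))
```

The more specific object wins: a StorPoolName on the volume definition hides any value set on the resource definition or node.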

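3.5. LINSTOR Without DRBD

LINSTOR can be used without DRBD as well. Without DRBD, LINSTOR can provision volumes from LVM and ZFS backed storage pools, and create those volumes on individual nodes in your LINSTOR cluster.

Currently, LINSTOR supports the creation of LVM and ZFS volumes, with the option of layering some combination of LUKS, DRBD, or NVMe-oF/NVMe-TCP on top of those volumes.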

For example, assume that you have a Thin LVM backed storage pool named thin-lvm defined in your LINSTOR cluster:

# linstor --no-utf8 storage-pool list
+--------------------------------------------------------------+
| StoragePool | Node | Driver | PoolName | ... |
|--------------------------------------------------------------|
| thin-lvm | linstor-a | LVM_THIN | drbdpool/thinpool | ... |
| thin-lvm | linstor-b | LVM_THIN | drbdpool/thinpool | ... |
| thin-lvm | linstor-c | LVM_THIN | drbdpool/thinpool | ... |
| thin-lvm | linstor-d | LVM_THIN | drbdpool/thinpool | ... |
+--------------------------------------------------------------+

You could use LINSTOR to create a 100GiB Thin LVM volume on linstor-d by using the following commands:

# linstor resource-definition create rsc-1
# linstor volume-definition create rsc-1 100GiB
# linstor resource create --layer-list storage \
  --storage-pool thin-lvm linstor-d rsc-1

You should then see that you have a new Thin LVM volume on linstor-d. You can extract the device path from LINSTOR by listing your LINSTOR resources with the --machine-readable flag set:

# linstor --machine-readable resource list | grep device_path
"device_path": "/dev/drbdpool/rsc-1_00000",

If you wanted to layer DRBD on top of this volume, which is the default --layer-list option in LINSTOR for ZFS or LVM backed volumes, you would use the following resource creation pattern instead:

# linstor resource-definition create rsc-1
# linstor volume-definition create rsc-1 100GiB
# linstor resource create --layer-list drbd,storage \
  --storage-pool thin-lvm linstor-d rsc-1

You would then see that you have a new Thin LVM backing a DRBD volume on linstor-d:

# linstor --machine-readable resource list | grep -e device_path -e backing_disk
"device_path": "/dev/drbd1000",
"backing_disk": "/dev/drbdpool/rsc-1_00000",

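Instead of grep, you can read the machine-readable output programmatically. The following sketch assumes that the output is JSON (as the "device_path" lines suggest). Because the exact nesting is not described here, it searches the whole document recursively for a key rather than relying on a fixed path. The SAMPLE string and its keys other than device_path and backing_disk are hypothetical and much simpler than real output.

```python
import json
from typing import Any, Iterator


def find_values(data: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in nested JSON data."""
    if isinstance(data, dict):
        for k, v in data.items():
            if k == key:
                yield v
            yield from find_values(v, key)
    elif isinstance(data, list):
        for item in data:
            yield from find_values(item, key)


# Hypothetical, heavily simplified sample; real output contains many more fields.
SAMPLE = """
[[{"name": "rsc-1",
   "volumes": [{"device_path": "/dev/drbd1000",
                "layer": {"backing_disk": "/dev/drbdpool/rsc-1_00000"}}]}]]
"""

if __name__ == "__main__":
    data = json.loads(SAMPLE)
    print(list(find_values(data, "device_path")))
    print(list(find_values(data, "backing_disk")))
```

To use it on a live cluster, you could pass it the output of subprocess.run(["linstor", "--machine-readable", "resource", "list"], capture_output=True, text=True, check=True).stdout instead of the sample.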
The following table shows which child layers can follow each layer:

| Layer      | Child layer                            |
|------------|----------------------------------------|
| DRBD       | CACHE, WRITECACHE, NVME, LUKS, STORAGE |
| CACHE      | WRITECACHE, NVME, LUKS, STORAGE        |
| WRITECACHE | CACHE, NVME, LUKS, STORAGE             |
| NVME       | CACHE, WRITECACHE, LUKS, STORAGE       |
| LUKS       | STORAGE                                |
| STORAGE    | –                                      |

| Each layer can occur only once in a layer list. |
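The table and the once-only rule can be expressed as a small check. The following Python sketch validates a comma-separated --layer-list value against the parent and child pairs in the table. The requirement that the list ends with STORAGE is an added assumption based on the examples in this guide, not a rule stated in the table. LINSTOR performs its own validation; this is only an illustration.

```python
# Allowed child layers, transcribed from the table above.
ALLOWED_CHILDREN = {
    "DRBD": {"CACHE", "WRITECACHE", "NVME", "LUKS", "STORAGE"},
    "CACHE": {"WRITECACHE", "NVME", "LUKS", "STORAGE"},
    "WRITECACHE": {"CACHE", "NVME", "LUKS", "STORAGE"},
    "NVME": {"CACHE", "WRITECACHE", "LUKS", "STORAGE"},
    "LUKS": {"STORAGE"},
    "STORAGE": set(),
}


def validate_layer_list(layer_list: str) -> list[str]:
    """Return the normalized layers, or raise ValueError if the list is invalid."""
    layers = [layer.strip().upper() for layer in layer_list.split(",")]
    for layer in layers:
        if layer not in ALLOWED_CHILDREN:
            raise ValueError(f"unknown layer: {layer!r}")
    if len(set(layers)) != len(layers):
        raise ValueError("each layer may occur only once")
    for parent, child in zip(layers, layers[1:]):
        if child not in ALLOWED_CHILDREN[parent]:
            raise ValueError(f"{child} cannot follow {parent}")
    # Assumption (not from the table): the bottom layer must be STORAGE.
    if layers[-1] != "STORAGE":
        raise ValueError("the last layer must be STORAGE")
    return layers


if __name__ == "__main__":
    print(validate_layer_list("drbd,storage"))
    print(validate_layer_list("WRITECACHE,STORAGE"))
```

Because no layer lists DRBD as a child, the table implies that DRBD can only appear at the top of a layer list.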

| For information about the prerequisites for the luks layer, refer to the Encrypted Volumes section of this user’s guide. |

3.5.1. NVMe-oF/NVMe-TCP LINSTOR Layer

NVMe-oF/NVMe-TCP allows LINSTOR to connect diskless resources over NVMe fabrics to a node that has the same resource and stores its data. The advantage is that resources can be mounted without using local storage, because the data is accessed over the network. LINSTOR does not use DRBD in this case, so NVMe resources provisioned by LINSTOR are not replicated; the data is stored on one node.

| NVMe-oF works only on RDMA-capable networks, and NVMe-TCP works on networks that can carry IP traffic. You can use tools such as lshw or ethtool to verify the capabilities of your network adapters. |

To use NVMe-oF/NVMe-TCP with LINSTOR, the nvme-cli package needs to be installed on every node that acts as a satellite and will use NVMe-oF/NVMe-TCP for a resource. For example, to install the package on a DEB-based system, enter the following command:

# apt install nvme-cli

If you are not on a DEB-based system, use the suitable command for installing packages on your operating system, for example, zypper on SLES or dnf on RPM-based systems.

To create a resource that uses NVMe-oF/NVMe-TCP, you have to specify an additional parameter when you create the resource definition:

# linstor resource-definition create nvmedata -l nvme,storage

| By default, the -l (layer-stack) parameter is set to drbd,storage when DRBD is used. If you want to create LINSTOR resources that use neither NVMe nor DRBD, you have to set the -l parameter to storage only. |

To use NVMe-TCP rather than the default NVMe-oF, you have to set the following property:

# linstor resource-definition set-property nvmedata NVMe/TRType tcp

The NVMe/TRType property can also be set at the resource-group or controller level.

Next, create the volume-definition for your resource:

# linstor volume-definition create nvmedata 500G

Before creating the resource on your nodes, determine where the data will be stored locally and which node will access it over the network.

First, create the resource on the node where your data will be stored:

# linstor resource create alpha nvmedata --storage-pool pool_ssd

On the nodes that will access the resource data over the network, you have to define the resource as diskless:

# linstor resource create beta nvmedata --nvme-initiator

Now you can mount the resource nvmedata on one of your nodes.

| If your nodes have more than one NIC, you should force the route between them for NVMe-oF/NVMe-TCP; otherwise, multiple NICs could cause problems. |

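3.5.2. Writecache Layer

A DM-Writecache device is composed of two devices: one storage device and one cache device. LINSTOR can set up such a writecache device, but needs some additional information, such as the storage pool and the size of the cache device.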

# linstor storage-pool create lvm node1 lvmpool drbdpool
# linstor storage-pool create lvm node1 pmempool pmempool
# linstor resource-definition create r1
# linstor volume-definition create r1 100G
# linstor volume-definition set-property r1 0 Writecache/PoolName pmempool
# linstor volume-definition set-property r1 0 Writecache/Size 1%
# linstor resource create node1 r1 --storage-pool lvmpool --layer-list WRITECACHE,STORAGE

The two properties set in the example are mandatory, but they can also be set at the controller level, which would act as a default for all resources with WRITECACHE in their --layer-list. However, note that Writecache/PoolName refers to the corresponding node. If the node does not have a storage pool named pmempool, you will get an error message.
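To estimate what Writecache/Size 1% means for the 100G volume in the example, the following sketch assumes that a percentage is taken relative to the size of the data volume and that 100G means 100 GiB. Both are assumptions to confirm in the LINSTOR property reference, and only percentage values are handled.

```python
from fractions import Fraction

GiB = 1024 ** 3


def cache_size_bytes(volume_size_bytes: int, size_spec: str) -> int:
    """Translate a percentage such as '1%' into a cache size in bytes.

    Assumption: the percentage is relative to the data volume size.
    """
    if not size_spec.endswith("%"):
        raise ValueError("this sketch only handles percentage values")
    percent = Fraction(size_spec[:-1])  # exact arithmetic, no float rounding
    return int(volume_size_bytes * percent / 100)


if __name__ == "__main__":
    size = cache_size_bytes(100 * GiB, "1%")  # 100G data volume, 1% cache
    print(size, size / GiB)
```

Under these assumptions, the example reserves about 1 GiB of the pmempool storage pool on node1 as cache.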