TCP is a streaming protocol. On nearly all Linux setups, this means that the Linux kernel takes care of delivering the data in order and without loss. [Specialized hardware that helps by calculating the TCP checksum is available, but we have seen that it can cause more problems than it solves.]

To send data, the TCP transport has to copy it into buffers, which takes time. Yes, zero-copy send solutions exist, but on the receiving side the fragments still have to be accumulated, sorted, and merged into buffers so that the storage devices (hard disks or SSDs) can do their DMA from contiguous 4KiB pages.

These internal copy operations into and out of buffers are one of the major bottlenecks for network I/O. You can start to see performance degrade in the 10 Gbit/s range, and the copies limit performance ever more severely from there on. In addition, all these copies add latency, which affects that all-important IOPS number. We talk about this in our user guide: Latency vs. IOPs.
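To make the link between latency and IOPS concrete, here is a rough back-of-the-envelope sketch. It assumes a fixed number of requests in flight (queue depth 1 by default), so a new request can only start once an earlier one completes. The function name `max_iops` and the latency figures are illustrative assumptions, not measurements.

```python
def max_iops(latency_us: float, queue_depth: int = 1) -> float:
    """Upper bound on IOPS when every request takes latency_us microseconds
    and at most queue_depth requests are in flight at any time."""
    if latency_us <= 0:
        raise ValueError("latency must be positive")
    return queue_depth * 1_000_000 / latency_us


# Hypothetical per-request latencies, chosen only for illustration.
for latency_us in (100.0, 20.0):
    print(f"{latency_us:.0f} us per request: "
          f"at most {max_iops(latency_us):,.0f} IOPS at queue depth 1")
```

Under these assumptions, every microsecond of extra copying per request directly lowers the ceiling on IOPS, which is why copy overhead matters even when bandwidth is plentiful.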

How does RDMA change everything?

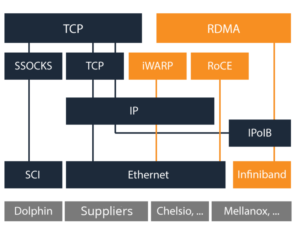

In contrast, Remote Direct Memory Access (RDMA) gives network hardware the ability to move data directly from RAM in one machine to RAM in another without involving the CPU (apart from specifying what should be transferred). It comes in various forms and implementations (InfiniBand, iWARP, RoCE) and with different on-wire protocols (some use IP and can therefore be routed, so they could be seen as “just” an advanced offload engine).

The common and important point is that the sender and receiver do not have to split the data up into MTU-sized chunks, nor join it back together (to get a single, aligned 4KiB page that can be transmitted to storage, for example). Instead, they specify “here are 16 pages of 4KiB, please store data coming from this channel into these next time” and “please push those 32KiB across this channel”. This means actual zero-copy send and receive, and much lower latency.
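For a sense of scale, the following sketch counts how many MTU-sized TCP segments a single 32KiB transfer is cut into. It assumes IPv4 and TCP headers of 20 bytes each with no options, and it ignores segmentation offloads in the NIC; the helper name `tcp_segments` is made up for this illustration.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options (timestamps would add more)


def tcp_segments(payload_bytes: int, mtu: int = 1500) -> int:
    """Number of TCP segments needed to carry payload_bytes over a link
    with the given MTU, under the header assumptions above."""
    mss = mtu - IPV4_HEADER - TCP_HEADER  # maximum payload per segment
    if mss <= 0:
        raise ValueError("MTU too small for the assumed headers")
    return -(-payload_bytes // mss)  # integer ceiling division


payload = 32 * 1024  # the 32KiB transfer from the example above
print("standard 1500-byte MTU:", tcp_segments(payload, 1500), "segments")
print("9000-byte jumbo frames:", tcp_segments(payload, 9000), "segments")
```

Each of those segments has to be produced on the sender and reassembled on the receiver, whereas an RDMA transfer of the same 32KiB is described once and then placed by the hardware.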

Some hardware allows splitting the physical device into multiple virtual ones; this feature is called SR-IOV. It means that a VM can push memory pages directly to another machine without involving the hypervisor OS or copying data around. This improves performance quite a bit compared to cutting data into pieces and moving them through the hypervisor.

RoCE – RDMA over Converged Ethernet

RDMA over Converged Ethernet (RoCE) is a network protocol that uses RDMA to speed up communication between applications hosted on clusters of servers and storage arrays. RoCE allows devices to transfer data directly from memory to memory without involving the host CPU.

Instead, transport processing, memory translation, and data placement are done by hardware. This lowers latency and increases performance compared to software-based protocols. One of the most popular uses is in private cloud environments, where the CPU cycles that are freed up can be spent on running virtual machines. Other uses include storage, data warehousing, and financial services.

Should I use it? And what are the benefits?

As mentioned earlier, the advantage of using RDMA over software-based protocols is that you avoid the bottleneck of loading the CPU with too many tasks during data transfer. This is a great advantage when you’re working with large data sets or complex processes; machine learning is a good example.

The first advantage is that you lower latency and increase throughput significantly. Another is that you free up CPU power, which can be used for other tasks. This increased efficiency is ingrained in the technology, and it can also lower the total cost of ownership, for example through reduced power consumption and a longer life for the physical hardware.

RDMA over Ethernet and over InfiniBand are both quite common, and they can provide speeds of 10 to 100 gigabits per second.

As with everything, though, RDMA has its downsides. The main one is the high level of investment that is usually necessary to run RDMA. RDMA has many requirements, the most expensive being NICs and network switches that support it. All systems on the same network must support the protocol.

Where is it used?

Anywhere low latency and high throughput are advantageous. RDMA skips the CPU, which can become a bottleneck in busy storage networks. Some of the most

iSER (iSCSI Extensions for RDMA) uses RDMA to more efficiently transport data between an iSCSI target and initiator.

NVMe-oF (NVMe over Fabrics) can transport data more efficiently between a target and an initiator than its predecessor iSCSI could, and even more efficiently than that when using RDMA. NVMe-oF as a protocol can be layered over Fibre Channel or Ethernet, making it

LINBIT’s DRBD also has an RDMA module that can make its synchronous replication more efficient. More often than not, DRBD is used in hyper-converged clusters where storage is directly attached to compute nodes, which means that there’s an application running on the same host as DRBD. RDMA would ensure DRBD and the application are not contending for CPU time.

How to set it up?

RHEL Installation:

Run the following installation commands on all servers:

# yum -y groupinstall "InfiniBand Support"

# yum -y install perftest infiniband-diags

Make sure that RDMA is enabled on boot.

# dracut --add-drivers "mlx4_en mlx4_ib mlx5_ib" -f

# systemctl enable rdma

Ubuntu Installation:

Run the following installation commands on all servers:

# apt-get install libmlx4-1 infiniband-diags ibutils ibverbs-utils rdmacm-utils perftest

In order to tell DRBD to use RDMA, follow the instructions in the DRBD User’s Guide.
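Before configuring DRBD, it can help to confirm that the kernel has actually registered an RDMA device after installation. On Linux, RDMA drivers expose their devices as entries under `/sys/class/infiniband`, so a minimal check can simply list that directory. The helper name `list_rdma_devices` is an illustrative assumption, and the sketch only shows that a device exists, not that its link is up.

```python
from pathlib import Path
from typing import List


def list_rdma_devices(sysfs_root: str = "/sys/class/infiniband") -> List[str]:
    """Return the names of the RDMA devices the kernel has registered.

    An empty list means the directory is missing or empty, which usually
    indicates that no RDMA driver is loaded for the installed hardware.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    return sorted(entry.name for entry in root.iterdir())


if __name__ == "__main__":
    devices = list_rdma_devices()
    if devices:
        print("RDMA devices found:", ", ".join(devices))
    else:
        print("No RDMA devices found; check that the drivers are loaded.")
```

Run this on every server. If a node reports no devices, revisit the driver installation on that node first.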